Moderating principles

July 25th, 2022

Some time around April 1994, I founded the Computation and Language E-Print Archive, the first preprint repository for a subfield of computer science. It was hosted on Paul Ginsparg’s arXiv platform, which at the time had been hosting only physics papers, built out from the original arXiv repository for high-energy physics theory, hep-th. The repository, cmp-lg (as it was then called), was superseded in 1999 by an open-access preprint repository for all of computer science, the Computing Research Repository (CoRR), which covered a broad range of subject areas, including computation and language. The CoRR organizing committee also decided to host CoRR on arXiv. I switched over to moderating for the CoRR repository from cmp-lg, and have continued to do so for the last – oh my god – 22 years.[1]

Articles in the arXiv are classified with a single primary subject class, and may have other subject classes as secondary. The switchover folded cmp-lg into the arXiv as articles tagged with the cs.CL (computation and language) subject class. I thus became the moderator for cs.CL.

A preprint repository like the arXiv is not a journal. There is no peer review applied to articles. There is essentially no quality control. That is not the role of a preprint repository. The role of a preprint repository is open distribution, not vetting. Nonetheless, some kind of control is needed in making sure that, at the very least, the documents being submitted are in fact scholarly articles and are appropriately tagged as to subfield, and that need has expanded with the dramatic increase in submissions to CoRR over the years. The primary duty of a moderator is to perform this vetting and triage: verifying that a submission possesses the minimum standards for being characterized as a scholarly article, and that it falls within the purview of, say, cs.CL, as a primary or secondary subject class.

I am (along with the other arXiv moderators) thus regularly in the position of having to make decisions as to whether a document is a scholarly article or not. To a large extent, Justice Potter Stewart’s approach works reasonably well for scholarly articles: you know them when you see them. But over time, as more marginal cases come up, I’ve felt that tracking my thinking on the matter would be useful for maintaining consistency in my own practice. And now that I’ve done that for a while, I thought it might be useful to share my approach more broadly. That is the goal of this post.

The following thus constitutes (some of) the de facto policies that I use in making decisions as the moderator for the cs.CL collection in the CoRR part of the arXiv repository. I emphasize that these are my policies, not those of CoRR or the moderators of other CoRR subjects. (The arXiv folks themselves provide a more general guide for arXiv moderators.)

WWHD?

April 21st, 2017

| …personal role model… Image of Harry Lewis courtesy of Harvard John A. Paulson School of Engineering and Applied Sciences |

This past Wednesday, April 19, was a celebration of computer science at Harvard, in honor of the 70th birthday of my undergraduate adviser, faculty colleague, former Dean of Harvard College, baseball aficionado, and personal role model Harry Lewis. The session lasted all day, with talks and reminiscences from many of Harry’s past students, myself included. For those interested, my brief remarks on the topic of “WWHD?” (What Would Harry Do?) can be found in the video of the event.

By the way, the “Slow Down” memo that I quoted from is available from Harry’s website. I recommend it for every future college first-year student.

Upcoming in Tromsø

November 11th, 2015

| Northern lights over Tromsø |

I’ll be visiting Tromsø, Norway to attend the Tenth Annual Munin Conference on Scholarly Publishing, which is being held November 30 to December 1. I’m looking forward to the talks, including keynotes from Randy Schekman and Sabine Hossenfelder and an interview by Caroline Sutton of my colleague Peter Suber, director of Harvard’s Office for Scholarly Communication. My own keynote will be on “The role of higher education institutions in scholarly publishing and communication”. Here’s the abstract:

Institutions of higher education are in a double bind with respect to scholarly communication: On the one hand, they need to support the research needs of their students and researchers by providing access to the journals that comprise the archival record of scholarship. Doing so requires payment of substantial subscription fees. On the other hand, they need to provide the widest possible dissemination of works by those same researchers — the fruits of that very research — which itself incurs costs. I address how these two goals, each of which demands outlays of substantial funds, can best be honored. In the course of the discussion, I provide a first look at some new results on predicting journal usage, which allows for optimizing subscriptions.

Update: The video of my talk at the Munin conference is now available.

Whence function notation?

September 28th, 2015

I begin (in continental style, unmotivated and, frankly, gratuitously) by defining Ackermann’s function \(A\) over two integers:

\[ A(m, n) = \left\{ \begin{array}{ll}
n + 1 & \mbox{ if $m=0$ } \\
A(m-1, 1) & \mbox{ if $m > 0$ and $n = 0$ } \\
A(m-1, A(m, n-1)) & \mbox{ if $m > 0$ and $n > 0$ }
\end{array} \right. \]

| …drawing their equations evanescently in dust and sand… Image of “Death of Archimedes” from Charles F. Horne, editor, Great Men and Famous Women, Volume 3, 1894. Reproduced by Project Gutenberg. Used by permission. |

You’ll have appreciated (unconsciously no doubt) that this definition makes repeated use of a notation in which a symbol precedes a parenthesized list of expressions, as for example \(f(a, b, c)\). This configuration represents the application of a function to its arguments. But you knew that. And why? Because everyone who has ever gotten through eighth grade math has been taught this notation. It is inescapable in high school algebra textbooks. It is a standard notation in the most widely used programming languages. It is the very archetype of common mathematical knowledge. It is, for God’s sake, in the Common Core. It is to mathematicians as water is to fish — so encompassing as to be invisible.
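To illustrate the point about programming languages: the definition of \(A\) above carries over nearly symbol for symbol into Python, one of those widely used languages. This is only a sketch. The name `ackermann` and the guard against negative arguments are additions for illustration, not part of the definition.

```python
def ackermann(m: int, n: int) -> int:
    """Ackermann's function A(m, n), following the three cases of the definition."""
    if m < 0 or n < 0:
        # Assumption: the definition is only meant for nonnegative integers.
        raise ValueError("m and n must be nonnegative")
    if m == 0:
        return n + 1
    if n == 0:
        return ackermann(m - 1, 1)
    return ackermann(m - 1, ackermann(m, n - 1))


if __name__ == "__main__":
    print(ackermann(2, 3))  # prints 9
```

Every call in the body, `ackermann(m - 1, 1)` included, is written with the function name followed by a parenthesized argument list. Keep the arguments small: the recursion gets deep so quickly that even `ackermann(4, 1)` exceeds Python's default recursion limit.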

Something so widespread, so familiar — it’s hard to imagine how it could be otherwise. It’s difficult to un-see it as anything but function application. But it was not always thus. Someone must have invented this notation, some time in the deep past. Perhaps it came into being when mathematicians were still drawing their equations evanescently in dust and sand. Perhaps all record has been lost of that ur-application that engendered all later function application expressions. Nonetheless, someone must have come up with the idea.

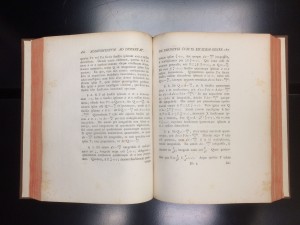

| …that ur-application… Photo from the author. |

Surprisingly, the origins of the notation are not shrouded in mystery. The careful and exhaustive scholarship of mathematical historian Florian Cajori (1929, page 267) argues for a particular instance as originating the use of this now ubiquitous notation. Leonhard Euler, the legendary mathematician and perhaps the greatest innovator in successful mathematical notations, proposed the notation first in 1734, in Section 7 of his paper “Additamentum ad Dissertationem de Infinitis Curvis Eiusdem Generis” [“An Addition to the Dissertation Concerning an Infinite Number of Curves of the Same Kind”].

The paper was published in 1740 in Commentarii Academiae Scientiarum Imperialis Petropolitanae [Memoirs of the Imperial Academy of Sciences in St. Petersburg], Volume VII, covering the years 1734-35. A visit to the Widener Library stacks produced a copy of the volume, letterpress printed on crisp rag paper, from which I took the image shown above of the notational innovation.

Here is the pertinent sentence (with translation by Ian Bruce):

Quocirca, si \(f\left(\frac{x}{a} +c\right)\) denotet functionem quamcunque ipsius \(\frac{x}{a} +c\) fiet quoque \(dx - \frac{x\, da}{a}\) integrabile, si multiplicetur per \(\frac{1}{a} f\left(\frac{x}{a} + c\right)\).

[On account of which, if \(f\left(\frac{x}{a} +c\right)\) denotes some function of \(\frac{x}{a} +c\), it also makes \(dx - \frac{x\, da}{a}\) integrable, if it is multiplied by \(\frac{1}{a} f\left(\frac{x}{a} + c\right)\).]

There is the function symbol — the archetypal \(f\), even then, to evoke the concept of function — followed by its argument corralled within simple curves to make clear its extent.

It’s seductive to think that there is an inevitability to the notation, but this is an illusion, following from habit. There are alternatives. Leibniz for instance used a boxy square-root-like diacritic over the arguments, with numbers to pick out the function being applied: \( \overline{a; b; c\,} \! | \! \lower .25ex {\underline{\,{}^1\,}} \! | \), and even Euler, in other later work, experimented with interposing a colon between the function and its arguments: \(f : (a, b, c)\). In the computing world, “reverse Polish” notation, found on HP calculators and in the programming languages Forth and PostScript, has the function symbol following its arguments: \(a\,b\,c\,f\), whereas the quintessential functional programming language Lisp parenthesizes the function and its arguments: \((f\ a\ b\ c)\).

Finally, ML and its dialects follow Church’s lambda calculus in merely concatenating the function and its (single) argument — \(f \, a\) — using parentheses only to disambiguate structure. But even here, Euler’s notation stands its ground, for the single argument of a function might itself have components, a ‘tuple’ of items \(a\), \(b\), and \(c\) perhaps. The tuples might be indicated using an infix comma operator, thus \(a,b,c\). Application of a function to a single tuple argument can then mimic functions of multiple arguments, for instance, \(f (a, b, c)\) — the parentheses required by the low precedence of the tuple forming operator — and we are back once again to Euler’s notation. Clever, no? Do you see the lengths to which people will go to adhere to Euler’s invention? As much as we might try new notational ideas, this one has staying power.
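The alternatives just surveyed can be mimicked side by side in a few lines of Python. This is an illustrative sketch, not anyone's actual implementation: the sample function `f`, the helper `eval_postfix`, and the names `f_curried` and `f_tupled` are all hypothetical, chosen only to show that the same application can be written in Euler's style, in reverse Polish style, in curried style, and as a function of a single tuple.

```python
from typing import Callable, Dict, List, Tuple, Union

Token = Union[int, str]


def f(a: int, b: int, c: int) -> int:
    """An arbitrary three-argument function, used only as an example."""
    return a + b * c


def eval_postfix(tokens: List[Token],
                 functions: Dict[str, Tuple[int, Callable[..., int]]]) -> int:
    """Evaluate reverse Polish input: arguments first, function symbol last."""
    stack: List[int] = []
    for token in tokens:
        if isinstance(token, str):
            # A function symbol consumes its arguments from the top of the stack.
            arity, function = functions[token]
            args = stack[len(stack) - arity:]
            del stack[len(stack) - arity:]
            stack.append(function(*args))
        else:
            stack.append(token)
    return stack.pop()


def f_curried(a: int) -> Callable[[int], Callable[[int], int]]:
    """Lambda-calculus style: one argument at a time, as in f a b c."""
    return lambda b: lambda c: f(a, b, c)


def f_tupled(args: Tuple[int, int, int]) -> int:
    """ML style with a single tuple argument, which looks like Euler again."""
    a, b, c = args
    return f(a, b, c)


if __name__ == "__main__":
    print(f(1, 2, 3))                                      # Euler: f(a, b, c)
    print(eval_postfix([1, 2, 3, "f"], {"f": (3, f)}))     # postfix: a b c f
    print(f_curried(1)(2)(3))                              # curried: f a b c
    print(f_tupled((1, 2, 3)))                             # tuple: f (a, b, c)
```

All four calls compute the same value, 7. Only the surface arrangement of function symbol and arguments differs.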

References

Florian Cajori. 1929. A History of Mathematical Notations, Volume II. Chicago: Open Court Publishing Company.

Leonhard Euler. 1734. Additamentum ad Dissertationem de Infinitis Curvis Eiusdem Generis. In Commentarii Academiae Scientiarum Imperialis Petropolitanae, Volume VII (1734–35), pages 184–202, 1740.

Binary search in the Old Testament

August 10th, 2015

The lot is cast into the lap,

but its every decision is from the Lord.

(NIV Proverbs 16:33)

| …“Lux et Veritas”… Seal of Yale University image from Wikimedia Commons. |

The seal of Yale University shows a book with the Hebrew אורים ותמים (urim v’thummim), a reference to the Urim and Thummim of the Old Testament. The Urim and Thummim were tools of divination. They show up first in Exodus:

Also put the Urim and the Thummim in the breastpiece, so they may be over Aaron’s heart whenever he enters the presence of the Lord. Thus Aaron will always bear the means of making decisions for the Israelites over his heart before the Lord. (NIV Exodus 28:30; emphasis added)

Apparently, the Urim and Thummim worked like flipping a coin, providing one bit of information, a single binary choice communicated from God.[1] Translations of Urim as “guilty” and Thummim as “innocent” indicate that the divination was used to determine guilt: “Thummim you win; Urim you lose.” An alternate translation has Urim “light” and Thummim “truth”, hence “Lux et Veritas” in the Yale seal’s banner.

Saul, King of Israel and father-in-law of King David, uses the binary choice provided by Urim and Thummim divination in 1 Samuel to unmask the party who violated the king’s oath:

Then Saul prayed to the Lord, the God of Israel, “Why have you not answered your servant today? If the fault is in me or my son Jonathan, respond with Urim, but if the men of Israel are at fault, respond with Thummim.” Jonathan and Saul were taken by lot, and the men were cleared. Saul said, “Cast the lot between me and Jonathan my son.” And Jonathan was taken. (NIV[2] 1 Samuel 14:41-42)

Saul executes a small (and highly unbalanced) binary search. He first divides the population into two parts. He and his son Jonathan are assigned Urim and all the rest get Thummim. God responds with Urim. Then to decide between Saul and Jonathan, the process is repeated, and Jonathan is fingered as the guilty party. (The method apparently works; the preceding verses of 1 Samuel give the story of Jonathan’s transgression.)
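The procedure can be sketched as a search driven by a one-bit oracle. Everything in the sketch is an assumption made for illustration: the function names (`find_culprit`, `halve`, `sauls_split`), the roster of six anonymous soldiers, and the oracle itself, which simply knows the answer and stands in for the lot. An answer of `True` plays the role of "Urim" (the culprit is in the first group) and `False` the role of "Thummim".

```python
from typing import Callable, List, Tuple

Split = Callable[[List[str]], Tuple[List[str], List[str]]]


def halve(suspects: List[str]) -> Tuple[List[str], List[str]]:
    """Balanced split: the textbook binary search step."""
    mid = len(suspects) // 2
    return suspects[:mid], suspects[mid:]


def sauls_split(suspects: List[str]) -> Tuple[List[str], List[str]]:
    """Unbalanced split: the royal house against everyone else, when possible."""
    royals = [s for s in suspects if s in ("Saul", "Jonathan")]
    others = [s for s in suspects if s not in ("Saul", "Jonathan")]
    if royals and others:
        return royals, others
    return halve(suspects)


def find_culprit(suspects: List[str],
                 oracle: Callable[[List[str]], bool],
                 split: Split) -> Tuple[str, int]:
    """Narrow the suspects down to one, asking one yes/no question per round."""
    questions = 0
    while len(suspects) > 1:
        first, rest = split(suspects)
        questions += 1
        suspects = first if oracle(first) else rest
    return suspects[0], questions


if __name__ == "__main__":
    people = ["Saul", "Jonathan"] + [f"soldier {i}" for i in range(1, 7)]

    def oracle(group: List[str]) -> bool:
        return "Jonathan" in group  # hypothetical stand-in for the lot

    print(find_culprit(people, oracle, sauls_split))  # ('Jonathan', 2)
    print(find_culprit(people, oracle, halve))        # ('Jonathan', 3)
```

With this roster, Saul's unbalanced split needs only two questions because the culprit happens to be in the small group. A balanced split needs three, but it would need no more than three whoever the culprit was.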

The universality of binary as an information conveying method has a longer history than one might have thought.

1. The Old Testament Urim and Thummim should not be confused with the “higher bandwidth” device of the same name that Joseph Smith claimed to use to receive the translation of a now lost 116 pages of the Book of Mormon. This device purportedly resembled a pair of spectacles with transparent rocks for lenses, a kind of “oraculus rift”. For the extant Book of Mormon, Smith changed his method to scrying with a “seer stone” placed in his hat. A photograph of the stone, coincidentally, has just recently been released by the LDS church.

2. The use of a Septuagint-based version of the Bible, here the New International Version, is important, as this verse is considerably shortened in versions such as the King James based on the Masoretic text, leaving out the use of Urim and Thummim to make a binary decision.

Becoming tin men

August 3rd, 2015

From the 2015 introduction to the 1965 novel The Tin Men by Michael Frayn:

“I hadn’t in those days heard of the Turing Test—Alan Turing’s proposal that a computer could be said to think if its conversational powers were shown to be indistinguishable from a human being’s—so I didn’t realise that what I was suggesting was a kind of converse of it: a demotion of human beings to the status of machines if their intellectual performance was indistinguishable from a computer’s, and they become tin men in their turn. The William Morris Institute is about to be visited by the Queen for the opening of a new wing, and I realise with hindsight that I’ve used a similar idea quite often since: the grand event that goes wrong, and deposits the protagonists into the humiliating gulf that so often in life opens between intention and achievement. My characters at the Institute could have written a story programme for me and saved me a lot of work. I’ve become a bit of a tin man myself.”

Plain meaning

June 26th, 2015

In its reporting on yesterday’s Supreme Court ruling in King v. Burwell, Vox’s Matthew Yglesias makes the important point that Justice Scalia’s dissent is based on a profound misunderstanding of how language works. Justice Scalia would have it that “words no longer have meaning if an Exchange that is not established by a State is ‘established by the state.’” The Justice is implicitly appealing to a “plain meaning” view of legislation: courts should just take the plain meaning of a law and not interpret it.

If only that were possible. If you think there’s such a thing as acquiring the “plain meaning” of a text without performing any interpretive inference, you don’t understand how language works. It’s the same mistake that fundamentalists make when they talk about looking to the plain meaning of the Bible. (And which Bible would that be anyway? The King James Version? Translation requires the same kind of inferential process – arguably the same actual process – as extracting meaning through reading.)

Yglesias describes “What Justice Scalia’s King v. Burwell dissent gets wrong about words and meaning” this way:

Individual stringz of letterz r efforts to express meaningful propositions in an intelligible way. To succeed at this mission does not require the youse of any particular rite series of words and, in fact, a sntnce fll of gibberish cn B prfctly comprehensible and meaningful 2 an intelligent reader. To understand a phrse or paragraf or an entire txt rekwires the use of human understanding and contextual infrmation not just a dctionry.

The jokey orthography aside, this observation that understanding the meaning of linguistic utterances requires the application of knowledge and inference is completely uncontroversial to your average linguist. Too bad Supreme Court justices don’t defer to linguists on how language works.

Let’s take a simple example, the original “Winograd sentences” from back in 1973:

1. The city councilmen refused the demonstrators a permit because they feared violence.

2. The city councilmen refused the demonstrators a permit because they advocated violence.

To understand these sentences, to recover their “plain meaning”, requires resolving to whom the pronoun ‘they’ refers. Is it the city councilmen or the demonstrators? Clearly, it is the former in sentence (1) and the latter in sentence (2). How do you know, given that the two sentences differ only in the single word alternation ‘feared’/‘advocated’? The recovery of this single aspect of the “plain meaning” of the sentence requires an understanding of how governmental organizations work, how activists pursue their goals, likely public reactions to various contingent behaviors, and the like, along with application of all that knowledge through plausible inference. The Patient Protection and Affordable Care Act (PPACA) has by my (computer-aided) count some 479 occurrences of pronouns in the nominative, accusative, or possessive case. Each one of these requires the identification of its antecedent, with all the reasoning that implies, to get its “plain meaning”.
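A count of this kind takes only a few lines of code. The sketch below is not the method behind the figure just cited. The pronoun list (third-person forms only), the crude tokenization, and the name `count_pronouns` are all assumptions, so its totals on the PPACA text may well differ from that figure.

```python
import re
from collections import Counter

# Assumed inventory: third-person pronouns in nominative, accusative,
# and possessive forms. A different inventory gives a different count.
PRONOUNS = {
    "he", "him", "his",
    "she", "her", "hers",
    "it", "its",
    "they", "them", "their", "theirs",
}


def count_pronouns(text: str) -> Counter:
    """Count occurrences of each listed pronoun, ignoring case and punctuation."""
    words = re.findall(r"[a-z]+", text.lower())
    return Counter(word for word in words if word in PRONOUNS)


if __name__ == "__main__":
    sentence = ("The city councilmen refused the demonstrators a permit "
                "because they feared violence.")
    counts = count_pronouns(sentence)
    print(counts)                 # Counter({'they': 1})
    print(sum(counts.values()))   # 1
```

Counting is the easy part. Nothing in the code says whether 'they' refers to the councilmen or the demonstrators, and that is the step that requires knowledge and inference.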

Examining the actual textual subject of controversy in the PPACA demonstrates the same issue. The phrase in question is “established by the state”. The American Heritage Dictionary provides six senses and nine subsenses for the transitive verb ‘establish’, of which (by my lights) sense 1a is appropriate for interpreting the PPACA: “To cause (an institution, for example) to come into existence or begin operating.” An alternative reading might, however, be sense 4: “To introduce and put (a law, for example) into force.” The choice of which sense is appropriate requires some reasoning of course about the context in which it was used, the denotata of the subject and object of the verb for instance. If one concludes that sense 1a was intended, then the Supreme Court’s decision is presumably correct, since a state’s formal relegation to the federal government of the role of running the exchange is an act of “causing to come into existence”, although perhaps not an act of “introducing and putting into force”. (Or further explication of the notions of “causing” or “introducing” might be necessary to decide the matter.) If the latter sense 4 were intended, then perhaps the Supreme Court was wrong in its recent decision. The important point is this: There is no possibility of deferring to the “plain meaning” on the issue; one must reason about the intentions of the authors to acquire even the literal meaning of the text. This process is exactly what Chief Justice Roberts undertakes in his opinion. Justice Scalia’s view, that plain meaning is somehow available without recourse to the use of knowledge and reasoning, is unfounded even in the simplest of cases.

In support of behavioral tests of intelligence

May 7th, 2015

| …“blockhead” argument… “Blockhead by Paul McCarthy @ Tate Modern” image from flickr user Matt Hobbs. Used by permission. |

Alan Turing proposed what is the best known criterion for attributing intelligence, the capacity for thinking, to a computer. We call it the Turing Test, and it involves comparing the computer’s verbal behavior to that of people. If the two are indistinguishable, the computer passes the test. This might be cause for attributing intelligence to the computer.

Or not. The best argument against a behavioral test of intelligence (like the Turing Test) is that maybe the exhibited behaviors were just memorized. This is Ned Block’s “blockhead” argument in a nutshell. If the computer just had all its answers literally encoded in memory, then parroting those memorized answers is no sign of intelligence. And how are we to know from a behavioral test like the Turing Test that the computer isn’t just such a “memorizing machine”?

In my new(ish) paper, “There can be no Turing-Test–passing memorizing machines”, I address this argument directly. My conclusion can be found in the title of the article. By careful calculation of the information and communication capacity of space-time, I show that any memorizing machine could pass a Turing Test of no more than a few seconds, which is no Turing Test at all. Crucially, I make no assumptions beyond the brute laws of physics. (One distinction of the article is that it is one of the few philosophy articles in which a derivative is taken.)

The article is published in the open access journal Philosophers’ Imprint, and is available here along with code to computer-verify the calculations.

The two Guildford mathematicians

February 18th, 2015

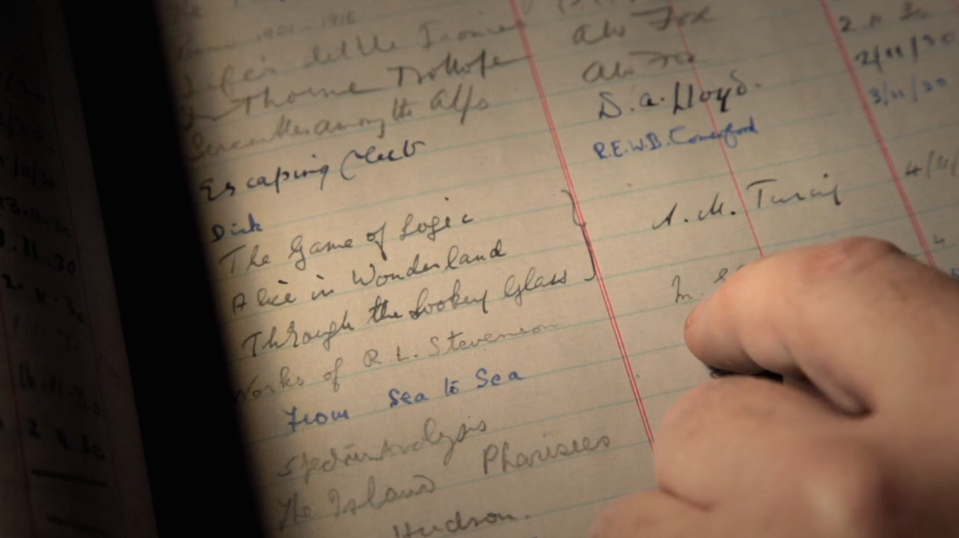

| …the huge ledger… Still from Codebreaker showing Turing’s checkout of three Carroll books. |

The charming town of Guildford, 40 minutes southwest of London on South West Trains, is associated with two famous British logician-mathematicians. Alan Turing (on whom I seem to perseverate) spent time there after 1927, when his parents purchased a home at 22 Ennismore Avenue just outside the Guildford town center. Although away at his boarding school, the Sherborne School in Dorset, which he attended from 1926 to 1931, Turing spent school holidays at the family home in Guildford. The house bears a blue plaque commemorating the connection with Turing, the “founder of computer science” as it aptly describes him, which you can see in the photo at right, taken on a pilgrimage I took this past June.

| …the family home… The Turing residence at 22 Ennismore Avenue, Guildford |

And this brings us to the second famous Guildford mathematician, who it turns out Turing was reading while at Sherborne. In the Turing docudrama Codebreaker, one of Turing’s biographers David Leavitt visits Sherborne and displays the huge ledger used for the handwritten circulation records of the Sherborne School library. There (Leavitt remarks), in an entry dated 11 April 1930, Turing has checked out three books, including Alice in Wonderland and Through the Looking Glass, and What Alice Found There. (We’ll come back to the third book shortly.) The books were, of course, written by the Oxford mathematics don Charles Lutwidge Dodgson under his better known pen name Lewis Carroll. Between the 1865 and 1871 publications of these his two most famous works, Carroll leased “The Chestnuts” in Guildford in 1868 to serve as a home for his sisters. The house sits at the end of Castle Hill Road adjacent to the Guildford Castle, which is as good a landmark as any to serve as the center of town. Carroll visited The Chestnuts on many occasions over the rest of his life; it was his home away from Christ Church home. He died there 30 years later and was buried at the Guildford Mount Cemetery.

| …through the looking glass… Statue of Alice passing through the looking glass, Guildford Castle Park, Guildford |

Guildford plays up its connection to Carroll much more than its Turing link. In the park surrounding Guildford Castle sits a statue of Alice passing through the looking glass, and the adjacent museum devotes considerable space to the Dodgson family. A statue depicting the first paragraphs of Alice’s adventures (Alice, her sister reading next to her, noticing a strange rabbit) sits along the bank of the River Wey. The Chestnuts itself, however, bears no blue plaque nor any marker of its link to Carroll. (A plaque formerly marking the brick gatepost has been removed, evidenced only by the damage to the brick where it had been.)

| …The Chestnuts… The Dodgson family home in Guildford |

Who knows whether Turing was aware that Carroll, whose two Alice books he was reading, had had a home a mere mile from where his parents were living. The Sherborne library entry provides yet another convergence between the two British-born, Oxbridge-educated, permanent bachelors with sui generis demeanors, questioned sexualities, and occasional stammers, interested in logic and mathematics.

But there’s more. What of the third book that Turing checked out of the Sherborne library at the same time? Leavitt finds the third book remarkable because the title, The Game of Logic, presages Turing’s later work in logic and the foundations of computer science. What Leavitt doesn’t seem to be aware of is that it is no surprise that this book would accompany the Alice books; it has the same author. Carroll published The Game of Logic in 1886. It serves to make what I believe to be the deepest connection between the two mathematicians, one that has to my knowledge never been noted before.

| …Carroll’s own copy… Title page of Lewis Carroll, The Game of Logic, 1886. EC85.D6645.886g, Houghton Library, Harvard University. |

After watching Codebreaker and noting the Game of Logic connection, I decided to refresh my memory about the book. I visited Harvard’s Houghton Library, which happens to have Carroll’s own copy of the book. The title page is shown at right, with the facing page visible showing a sample card to be used in the game. The book was sold together with a copy of the card made of pasteboard and counters of two colors (red and grey) to be used to mark the squares on the card.

The Houghton visit and the handling of the game pieces jogged my memory as to the point of Carroll’s book. Carroll’s goal in The Game of Logic was to describe a system for carrying out syllogistic reasoning that even a child could master. Towards that goal, the system was intended to be completely mechanical. It involved the card marked off in squares and the two types of counters placed on the card in various configurations. Any of a large class of syllogisms over arbitrary properties can be characterized in this way, given a large enough card and enough counters, though it becomes unwieldy quite quickly after just a few.

| … marked off in squares… The game card depicting a syllogism. Lewis Carroll, The Game of Logic, 1886. EC85.D6645.886g, Houghton Library, Harvard University. |

(The photo at right shows the card and counters that came with the book. I’ve placed the counters in such a way as to depict the syllogism:

No red apples are unripe. Some wholesome apples are red.

∴ Some ripe apples are red. )

To computer scientists, this ought to sound familiar. Just six years after checking out The Game of Logic from his school library, Turing would publish his groundbreaking paper “On computable numbers”, in which he describes a system for carrying out computations in a way that is completely mechanical. It involves a paper tape marked off in squares, and markings of at least two types placed on the tape in various configurations. Any of a large class of computations over arbitrary values can be characterized in this way, given a large enough tape and enough markings, though it becomes unwieldy quite quickly. We now call this mechanical device with tape and markings a Turing machine, and recognize it as the first universal model of computation. Turing’s paper serves as the premier work in the then nascent field of computer science.
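To make "completely mechanical" concrete, here is a minimal sketch of such a tape-and-markings device. It is not a transcription of anything in Turing's paper. The simulator `run_machine`, the blank symbol `_`, the state names, and the toy rule table `INCREMENT` (which appends one more mark to a row of marks) are all assumptions chosen for brevity.

```python
from collections import defaultdict
from typing import Dict, Tuple

BLANK = "_"  # assumed symbol for an unmarked square

# (state, symbol read) -> (symbol to write, head move, next state)
Rules = Dict[Tuple[str, str], Tuple[str, str, str]]

# Toy machine: scan right across a row of 1s, then mark the first blank square.
INCREMENT: Rules = {
    ("start", "1"): ("1", "R", "start"),
    ("start", BLANK): ("1", "R", "halt"),
}


def run_machine(rules: Rules, tape: str, state: str = "start",
                halt: str = "halt", max_steps: int = 1000) -> str:
    """Apply the rule table until the halt state (or the step limit) is reached."""
    squares = defaultdict(lambda: BLANK, enumerate(tape))
    head = 0
    for _ in range(max_steps):
        if state == halt:
            break
        write, move, state = rules[(state, squares[head])]
        squares[head] = write
        head += {"L": -1, "R": 1}[move]
    if not squares:
        return ""
    lo, hi = min(squares), max(squares)
    return "".join(squares[i] for i in range(lo, hi + 1)).strip(BLANK)


if __name__ == "__main__":
    print(run_machine(INCREMENT, "111"))  # prints 1111
```

The rule table is the whole "program". Each step looks only at the current state and the marking in the current square, which is the sense in which the procedure needs no insight to carry out.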

Of course, there are differences both superficial and fundamental between Carroll’s game and Turing’s machine. Carroll’s card is two-dimensional with squares marked off in a lattice pattern, and counters are placed both within the squares and on the edges between squares. Turing’s tape is one-dimensional (though two-dimensional Turing machines have been defined and analyzed) and the markings are placed only within the squares. Most importantly, nothing even approaching the ramifications that Turing developed on the basis of his model came from Carroll’s simple game. (As a mathematician, Carroll was no Turing.) Nonetheless, in a sense the book that Turing read at 17 attempts to do for logic what Turing achieved six years later for computation.

I have no idea whether Lewis Carroll’s The Game of Logic influenced Alan Turing’s thinking about computability. But it serves as perhaps the strongest conceptual bond between Guildford’s two great mathematicians.

Update February 25, 2015: Thanks to Houghton Library Blog for reblogging this post.

The Turing moment

November 30th, 2014

| …less histrionic… Ed Stoppard as Alan Turing in Codebreaker |

We seem to be at the “Turing moment”, what with Benedict Cumberbatch, erstwhile Sherlock Holmes, now starring as a Hollywood Alan Turing in The Imitation Game. The release culminates a series of Turing-related events over the last few years. The centennial of Turing’s 1912 birth was celebrated actively in the computer science community as a kind of jubilee, the occasion of numerous conferences, retrospectives, and presentations. Bracketing that celebration, PM Gordon Brown publicly apologized in 2009 for Britain’s horrific treatment of Turing, and HM Queen Elizabeth II, who was crowned a couple of years before Alan Turing took his own life as his escape from her government’s abuse, finally got around to pardoning him in 2013 for the crime of being gay.

I went to see a preview of The Imitation Game at the Coolidge Corner Theatre’s “Science on Screen” series. I had low expectations, and I was not disappointed. The film is introduced as being “based on a true story”, and so it is – in the sense that My Fair Lady was based on the myth of Pygmalion (rather than the Shaw play). Yes, there was a real place called Bletchley Park, and real people named Alan Turing and Joan Clarke, but no, they weren’t really like that. Turing didn’t break the Enigma code singlehandedly despite the efforts of his colleagues to stop him. Turing didn’t take it upon himself to control the resulting intelligence to limit the odds of their break being leaked to the enemy. And so on, and so forth. Most importantly, Turing did not attempt to hide his homosexuality from the authorities, and promoting the idea that he did for dramatic effect is, frankly, an injustice to his memory.

Reviewers seem generally to appreciate the movie’s cleaving from reality, though with varying opprobrium. “The truth of history is respected just enough to make room for tidy and engrossing drama,” says A. O. Scott in the New York Times. The Wall Street Journal’s Joe Morgenstern ascribes to the film “a marvelous story about science and humanity, plus a great performance by Benedict Cumberbatch, plus first-rate filmmaking and cinematography, minus a script that muddles its source material to the point of betraying it.” At Slate, Dana Stevens notes that “The true life story of Alan Turing is much stranger, sadder and more troubling than the version of it on view in The Imitation Game, Morten Tyldum’s handsome but overlaundered biopic.”

Of course, they didn’t make the movie for people like me, that is, people who had heard of Alan Turing before. And to the extent that the film contributes to this Turing moment — leading viewers to look further into this most idiosyncratic and important person — it will be a good thing. The Coolidge Corner Theatre event was followed by commentary from Silvio Micali and Seth Lloyd, both professors at MIT. (The former is a recipient of the highest honor in computer science, the Turing Award. Yes, that Turing.) Their comments brought out the many scientific contributions of Turing that were given short shrift in the film. If only they could duplicate their performance at every showing.

Those who become intrigued by the story of Alan Turing could do worse than follow up their viewing of the Cumberbatch vehicle with one of the 2012 docudrama Codebreaker, a less histrionic but far more accurate (and surprisingly, more sweeping) presentation of Turing’s contributions to science and society, and his societal treatment. I had the pleasure of introducing the film and its executive producer Patrick Sammon in a screening at Harvard a couple of weeks ago. The event was another indicator of the Turing moment. (My colleague Harry Lewis has more to say about the film.)

To all of you who are aware of the far-reaching impact of Alan Turing on science, on history, and on society, and the tragedy of his premature death, I hope you will take advantage of the present Turing moment to spread the word about computer science’s central personage.