Becoming tin men

August 3rd, 2015

From the 2015 introduction to the 1965 novel The Tin Men by Michael Frayn:

“I hadn’t in those days heard of the Turing Test—Alan Turing’s proposal that a computer could be said to think if its conversational powers were shown to be indistinguishable from a human being’s—so I didn’t realise that what I was suggesting was a kind of converse of it: a demotion of human beings to the status of machines if their intellectual performance was indistinguishable from a computer’s, and they become tin men in their turn. The William Morris Institute is about to be visited by the Queen for the opening of a new wing, and I realise with hindsight that I’ve used a similar idea quite often since: the grand event that goes wrong, and deposits the protagonists into the humiliating gulf that so often in life opens between intention and achievement. My characters at the Institute could have written a story programme for me and saved me a lot of work. I’ve become a bit of a tin man myself.”

In support of behavioral tests of intelligence

May 7th, 2015

…“blockhead” argument… “Blockhead by Paul McCarthy @ Tate Modern” image from flickr user Matt Hobbs. Used by permission.

Alan Turing proposed what is the best known criterion for attributing intelligence, the capacity for thinking, to a computer. We call it the Turing Test, and it involves comparing the computer’s verbal behavior to that of people. If the two are indistinguishable, the computer passes the test. This might be cause for attributing intelligence to the computer.

Or not. The best argument against a behavioral test of intelligence (like the Turing Test) is that maybe the exhibited behaviors were just memorized. This is Ned Block’s “blockhead” argument in a nutshell. If the computer just had all its answers literally encoded in memory, then parroting those memorized answers is no sign of intelligence. And how are we to know from a behavioral test like the Turing Test that the computer isn’t just such a “memorizing machine”?

In my new(ish) paper, “There can be no Turing-Test–passing memorizing machines”, I address this argument directly. My conclusion can be found in the title of the article. By careful calculation of the information and communication capacity of space-time, I show that any memorizing machine could pass a Turing Test lasting at most a few seconds, which is no Turing Test at all. Crucially, I make no assumptions beyond the brute laws of physics. (One distinction of the article is that it is one of the few philosophy articles in which a derivative is taken.)
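To get a feel for why memorization runs out of room so quickly, here is a back-of-the-envelope sketch. It is not the calculation from the paper: the alphabet size, the storage budget (the commonly quoted rough figure of 10^80 atoms in the observable universe), and the typing speed are all assumed round numbers chosen purely for illustration.

```python
# Rough illustration only: every figure below is an assumed round number,
# not a value taken from the paper.
ALPHABET_SIZE = 27          # assumed: 26 letters plus a space
STORAGE_SLOTS = 10 ** 80    # assumed: one stored entry per atom (~10^80 estimate)
CHARS_PER_SECOND = 5        # assumed typing speed of the interrogator


def longest_memorizable_input(alphabet_size: int, slots: int) -> int:
    """Largest n such that one table entry per n-character input fits in `slots`."""
    n = 0
    while alphabet_size ** (n + 1) <= slots:
        n += 1
    return n


max_chars = longest_memorizable_input(ALPHABET_SIZE, STORAGE_SLOTS)
max_seconds = max_chars / CHARS_PER_SECOND
print(f"{max_chars} characters, about {max_seconds:.0f} seconds of typing")
```

Because the number of possible inputs grows exponentially with their length, even wildly generous changes to the assumed storage budget move the answer by only a handful of characters.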

The article is published in the open access journal Philosophers’ Imprint, and is available here along with code to computer-verify the calculations.

The two Guildford mathematicians

February 18th, 2015

|

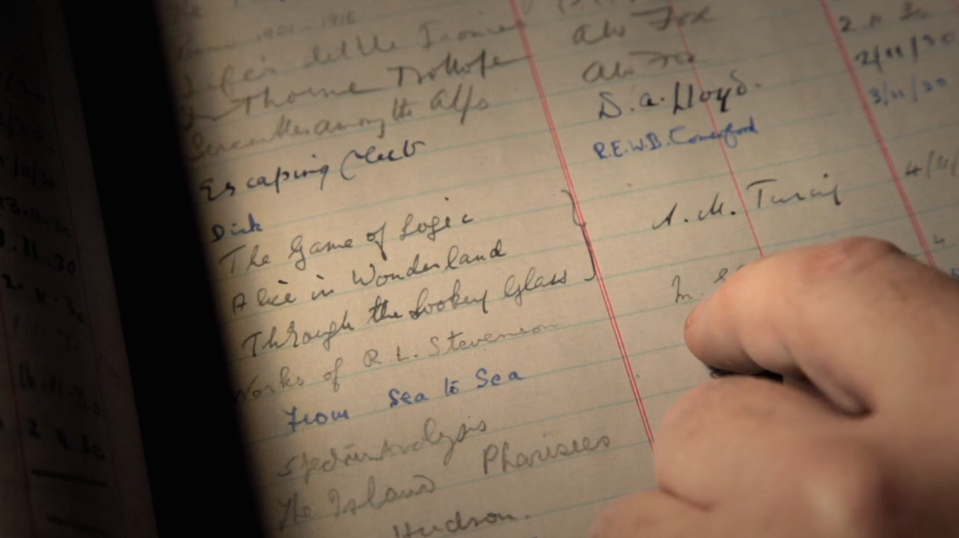

| …the huge ledger… Still from Codebreaker showing Turing’s checkout of three Carroll books. |

The charming town of Guildford, 40 minutes southwest of London on South West Trains, is associated with two famous British logician-mathematicians. Alan Turing (on whom I seem to perseverate) spent time there after 1927, when his parents purchased a home at 22 Ennismore Avenue just outside the Guildford town center. Although away at his boarding school, the Sherborne School in Dorset, which he attended from 1926 to 1931, Turing spent school holidays at the family home in Guildford. The house bears a blue plaque commemorating the connection with Turing, the “founder of computer science” as it aptly describes him, which you can see in the photo at right, taken on a pilgrimage I took this past June.

…the family home… The Turing residence at 22 Ennismore Avenue, Guildford

And this brings us to the second famous Guildford mathematician, who it turns out Turing was reading while at Sherborne. In the Turing docudrama Codebreaker, one of Turing’s biographers David Leavitt visits Sherborne and displays the huge ledger used for the handwritten circulation records of the Sherborne School library. There (Leavitt remarks), in an entry dated 11 April 1930, Turing has checked out three books, including Alice in Wonderland and Through the Looking Glass, and What Alice Found There. (We’ll come back to the third book shortly.) The books were, of course, written by the Oxford mathematics don Charles Lutwidge Dodgson under his better known pen name Lewis Carroll. Between the 1865 and 1871 publications of these his two most famous works, Carroll leased “The Chestnuts” in Guildford in 1868 to serve as a home for his sisters. The house sits at the end of Castle Hill Road adjacent to the Guildford Castle, which is as good a landmark as any to serve as the center of town. Carroll visited The Chestnuts on many occasions over the rest of his life; it was his home away from Christ Church home. He died there 30 years later and was buried at the Guildford Mount Cemetery.

…through the looking glass… Statue of Alice passing through the looking glass, Guildford Castle Park, Guildford

Guildford plays up its connection to Carroll much more than its Turing link. In the park surrounding Guildford Castle sits a statue of Alice passing through the looking glass, and the adjacent museum devotes considerable space to the Dodgson family. A statue depicting the first paragraphs of Alice’s adventures (Alice, her sister reading next to her, noticing a strange rabbit) sits along the bank of the River Wey. The Chestnuts itself, however, bears no blue plaque nor any marker of its link to Carroll. (A plaque formerly marking the brick gatepost has been removed, evidenced only by the damage to the brick where it had been.)

…The Chestnuts… The Dodgson family home in Guildford

Who knows whether Turing was aware that Carroll, whose two Alice books he was reading, had had a home a mere mile from where his parents were living. The Sherborne library entry provides yet another convergence between the two British-born, Oxbridge-educated, permanent bachelors with sui generis demeanors, questioned sexualities, and occasional stammers, interested in logic and mathematics.

But there’s more. What of the third book that Turing checked out of the Sherborne library at the same time? Leavitt finds the third book remarkable because the title, The Game of Logic, presages Turing’s later work in logic and the foundations of computer science. What Leavitt doesn’t seem to be aware of is that it is no surprise that this book would accompany the Alice books; it has the same author. Carroll published The Game of Logic in 1886. It serves to make what I believe to be the deepest connection between the two mathematicians, one that has to my knowledge never been noted before.

…Carroll’s own copy… Title page of Lewis Carroll, The Game of Logic, 1886. EC85.D6645.886g, Houghton Library, Harvard University.

After watching Codebreaker and noting the Game of Logic connection, I decided to refresh my memory about the book. I visited Harvard’s Houghton Library, which happens to have Carroll’s own copy of the book. The title page is shown at right, with the facing page visible showing a sample card to be used in the game. The book was sold together with a copy of the card made of pasteboard and counters of two colors (red and grey) to be used to mark the squares on the card.

The Houghton visit and the handling of the game pieces jogged my memory as to the point of Carroll’s book. Carroll’s goal in The Game of Logic was to describe a system for carrying out syllogistic reasoning that even a child could master. Towards that goal, the system was intended to be completely mechanical. It involved the card marked off in squares and the two types of counters placed on the card in various configurations. Any of a large class of syllogisms over arbitrary properties can be characterized in this way, given a large enough card and enough counters, though it becomes unwieldy after just a few properties.

…marked off in squares… The game card depicting a syllogism. Lewis Carroll, The Game of Logic, 1886. EC85.D6645.886g, Houghton Library, Harvard University.

(The photo at right shows the card and counters that came with the book. I’ve placed the counters in such a way as to depict the syllogism:

No red apples are unripe. Some wholesome apples are red.

∴ Some ripe apples are red. )
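The mechanical flavor of this kind of reasoning can be sketched in a few lines of code. What follows is not Carroll's procedure with card and counters; it is a brute-force stand-in that, by assumption, treats each combination of the three attributes as a cell that is either occupied or empty, and then checks every possible marking of the cells. All names in it are made up for the illustration.

```python
from itertools import product

ATTRIBUTES = ("red", "ripe", "wholesome")
# One cell per combination of attributes (eight cells for three attributes).
CELLS = [dict(zip(ATTRIBUTES, values)) for values in product([True, False], repeat=3)]


def some(world, predicate):
    """True if at least one occupied cell satisfies the predicate."""
    return any(occupied and predicate(cell) for cell, occupied in zip(CELLS, world))


def no(world, predicate):
    """True if no occupied cell satisfies the predicate."""
    return not some(world, predicate)


def is_valid(premises, conclusion):
    """Check every occupied/empty marking of the cells for a counterexample."""
    for world in product([True, False], repeat=len(CELLS)):
        if all(p(world) for p in premises) and not conclusion(world):
            return False
    return True


premises = [
    lambda w: no(w, lambda a: a["red"] and not a["ripe"]),     # No red apples are unripe.
    lambda w: some(w, lambda a: a["wholesome"] and a["red"]),  # Some wholesome apples are red.
]
conclusion = lambda w: some(w, lambda a: a["ripe"] and a["red"])  # Some ripe apples are red.

print(is_valid(premises, conclusion))
```

Dropping the second premise makes the check fail, since a world with no apples at all satisfies the first premise but not the conclusion.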

To computer scientists, this ought to sound familiar. Just six years after checking out The Game of Logic from his school library, Turing would publish his groundbreaking paper “On computable numbers”, in which he describes a system for carrying out computations in a way that is completely mechanical. It involves a paper tape marked off in squares, and markings of at least two types placed on the tape in various configurations. Any of a large class of computations over arbitrary values can be characterized in this way, given a large enough tape and enough markings, though it becomes unwieldy quite quickly. We now call this mechanical device with tape and markings a Turing machine, and recognize it as the first universal model of computation. Turing’s paper serves as the premier work in the then nascent field of computer science.
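For readers who have not met one, a machine of this kind fits in a few lines. The sketch below is a minimal simulator under simplifying assumptions (a dictionary for the tape, a single halting state, a step limit); the example machine, which flips every bit and stops at the first blank, and all of the names are invented for illustration and are not taken from Turing's paper.

```python
BLANK = "_"


def run(transitions, tape, state="start", halt="halt", max_steps=1000):
    """Run a one-tape machine; transitions maps (state, symbol) to (write, move, next_state)."""
    cells = dict(enumerate(tape))  # square index -> marking
    head = 0
    steps = 0
    while state != halt:
        if steps >= max_steps:
            raise RuntimeError("machine did not halt within max_steps")
        symbol = cells.get(head, BLANK)
        write, move, state = transitions[(state, symbol)]
        cells[head] = write
        head += 1 if move == "R" else -1
        steps += 1
    if not cells:
        return ""
    lo, hi = min(cells), max(cells)
    return "".join(cells.get(i, BLANK) for i in range(lo, hi + 1)).strip(BLANK)


# Hypothetical example machine: flip each bit, halt on the first blank square.
FLIP_BITS = {
    ("start", "0"): ("1", "R", "start"),
    ("start", "1"): ("0", "R", "start"),
    ("start", BLANK): (BLANK, "R", "halt"),
}

print(run(FLIP_BITS, "0110"))
```

The whole behavior of the machine lives in the transition table; the simulator itself knows nothing about bits or flipping.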

Of course, there are differences both superficial and fundamental between Carroll’s game and Turing’s machine. Carroll’s card is two-dimensional with squares marked off in a lattice pattern, and counters are placed both within the squares and on the edges between squares. Turing’s tape is one-dimensional (though two-dimensional Turing machines have been defined and analyzed) and the markings are placed only within the squares. Most importantly, nothing even approaching the ramifications that Turing developed on the basis of his model came from Carroll’s simple game. (As a mathematician, Carroll was no Turing.) Nonetheless, in a sense the book that Turing read at 17 attempts to do for logic what Turing achieved six years later for computation.

I have no idea whether Lewis Carroll’s The Game of Logic influenced Alan Turing’s thinking about computability. But it serves as perhaps the strongest conceptual bond between Guildford’s two great mathematicians.

Update February 25, 2015: Thanks to Houghton Library Blog for reblogging this post.

The Turing moment

November 30th, 2014

…less histrionic… Ed Stoppard as Alan Turing in Codebreaker

We seem to be at the “Turing moment”, what with Benedict Cumberbatch, erstwhile Sherlock Holmes, now starring as a Hollywood Alan Turing in The Imitation Game. The release culminates a series of Turing-related events over the last few years. The centennial of Turing’s 1912 birth was celebrated actively in the computer science community as a kind of jubilee, the occasion of numerous conferences, retrospectives, and presentations. Bracketing that celebration, PM Gordon Brown publicly apologized for Britain’s horrific treatment of Turing in 2009, and HM Queen Elizabeth II, who was crowned a couple of years before Alan Turing took his own life as his escape from her government’s abuse, finally got around to pardoning him in 2013 for the crime of being gay.

I went to see a preview of The Imitation Game at the Coolidge Corner Theatre’s “Science on Screen” series. I had low expectations, and I was not disappointed. The film is introduced as being “based on a true story”, and so it is – in the sense that My Fair Lady was based on the myth of Pygmalion (rather than the Shaw play). Yes, there was a real place called Bletchley Park, and real people named Alan Turing and Joan Clarke, but no, they weren’t really like that. Turing didn’t break the Enigma code singlehandedly despite the efforts of his colleagues to stop him. Turing didn’t take it upon himself to control the resulting intelligence to limit the odds of their break being leaked to the enemy. And so on, and so forth. Most importantly, Turing did not attempt to hide his homosexuality from the authorities, and promoting the idea that he did for dramatic effect is, frankly, an injustice to his memory.

Reviewers seem generally to appreciate the movie’s cleaving from reality, though with varying opprobrium. “The truth of history is respected just enough to make room for tidy and engrossing drama,” says A. O. Scott in the New York Times. The Wall Street Journal’s Joe Morgenstern ascribes to the film “a marvelous story about science and humanity, plus a great performance by Benedict Cumberbatch, plus first-rate filmmaking and cinematography, minus a script that muddles its source material to the point of betraying it.” At Slate, Dana Stevens notes that “The true life story of Alan Turing is much stranger, sadder and more troubling than the version of it on view in The Imitation Game, Morten Tyldum’s handsome but overlaundered biopic.”

Of course, they didn’t make the movie for people like me, that is, people who had heard of Alan Turing before. And to the extent that the film contributes to this Turing moment — leading viewers to look further into this most idiosyncratic and important person — it will be a good thing. The Coolidge Corner Theatre event was followed by commentary from Silvio Micali and Seth Lloyd, both professors at MIT. (The former is a recipient of the highest honor in computer science, the Turing Award. Yes, that Turing.) Their comments brought out the many scientific contributions of Turing that were given short shrift in the film. If only they could duplicate their performance at every showing.

Those who become intrigued by the story of Alan Turing could do worse than follow up their viewing of the Cumberbatch vehicle with one of the 2012 docudrama Codebreaker, a less histrionic but far more accurate (and surprisingly, more sweeping) presentation of Turing’s contributions to science and society, and his societal treatment. I had the pleasure of introducing the film and its executive producer Patrick Sammon in a screening at Harvard a couple of weeks ago. The event was another indicator of the Turing moment. (My colleague Harry Lewis has more to say about the film.)

To all of you who are aware of the far-reaching impact of Alan Turing on science, on history, and on society, and the tragedy of his premature death, I hope you will take advantage of the present Turing moment to spread the word about computer science’s central personage.

No, the Turing Test has not been passed.

June 10th, 2014

…that’s not Turing’s Test… “Turing Test” image from xkcd. Used by permission.

There has been a flurry of interest in the Turing Test in the last few days, precipitated by a claim that (at last!) a program has passed the Test. The program in question is called “Eugene Goostman” and the claim is promulgated by Kevin Warwick, a professor of cybernetics at the University of Reading and organizer of a recent chatbot competition there.

The Turing Test is a topic that I have a deep interest in (see this, and this, and this, and this, and, most recently, this), so I thought to give my view on Professor Warwick’s claim “We are therefore proud to declare that Alan Turing’s Test was passed for the first time on Saturday.” The main points are these. The Turing Test was not passed on Saturday, and “Eugene Goostman” seems to perform qualitatively about as poorly as many other chatbots in emulating human verbal behavior. In summary: There’s nothing new here; move along.

First, the Turing Test that Turing had in mind was a criterion of indistinguishability in verbal performance between human and computer in an open-ended wide-ranging interaction. In order for the Test to be passed, judges had to perform no better than chance in unmasking the computer. But in the recent event, the interactions were quite time-limited (only five minutes) and in any case, the purported Turing-Test-passing program was identified correctly more often than not by the judges (almost 70% of the time in fact). That’s not Turing’s test.
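The “no better than chance” criterion can be made concrete with a one-sided binomial tail: how likely is it that judges who were merely guessing would identify the computer correctly at least this often? The counts in the sketch below are hypothetical placeholders chosen to sit near the two-thirds mark; they are not the actual figures from the Reading event.

```python
from math import comb


def tail_probability(correct: int, trials: int) -> float:
    """P(at least `correct` right identifications in `trials` fair coin flips)."""
    return sum(comb(trials, k) for k in range(correct, trials + 1)) / 2 ** trials


# Hypothetical counts for illustration only (not the event's real data).
HYPOTHETICAL_TRIALS = 30
HYPOTHETICAL_CORRECT = 20

p_value = tail_probability(HYPOTHETICAL_CORRECT, HYPOTHETICAL_TRIALS)
print(f"chance of doing this well by guessing: {p_value:.3f}")
```

A small tail probability means the judges were doing something better than guessing, which is the opposite of what passing the Test requires.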

Update June 17, 2014: The time limitation was even worse than I thought. According to my colleague Luke Hunsberger, computer science professor at Vassar College, who was a judge in this event, the five minute time limit was for two simultaneous interactions. Further, there were often substantial response delays in the system. In total, he estimated that a judge might average only four or five rounds of chat with each interlocutor. I’ve argued before that a grossly time-limited Turing Test is no Turing Test at all.

Sometimes, people trot out the prediction from Turing’s seminal 1950 Mind article that “I believe that in about fifty years’ time it will be possible to programme computers, with a storage capacity of about \(10^9\), to make them play the imitation game so well that an average interrogator will not have more than 70 per cent. chance of making the right identification after five minutes of questioning.” As I explain in my book on the Test:

The first thing to note about the prediction is that it is not a prediction about the Test per se: Turing expects 70 percent prediction accuracy, not the more difficult 50 percent expected by chance, and this after only a limited conversation of five minutes. He is therefore predicting passage of a test much simpler than the Test.

Not only does the prediction not presuppose a full Turing Test, but it could well be argued that it had already come to pass with the advent of Joseph Weizenbaum’s Eliza some thirty-five years early. Weizenbaum developed a simple computer program that attempted to imitate the parroting conversational style of a Rogerian psychotherapist…. Although the methods used were quite simple – repeating the user’s question after adjusting some pronouns, throwing in a stock phrase every now and then – the result was, in its own way, extremely convincing.

Second, “Eugene Goostman” uses the very techniques that began with Weizenbaum’s “Eliza” program from the 1960s. We see the same tricks – repeating the judge’s statements with simple substitutions, keyword-triggered responses, falling back on vague or unresponsive replies, and the like. Those tricks are no more successful than they have been in the two decades of runnings of the Loebner Prize Competition, another ill-conceived attempt at running a Turing-like test. And there too, entrants used the trick of having their programs emulate humans with built-in excuses. “Eugene Goostman” purports to be a non-English-fluent child, rather than a fluent adult.1
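How little machinery these tricks need is easy to show. The toy responder below is a sketch of the general style only: the keyword table, the pronoun swaps, and the fallback line are all invented for the illustration and are not drawn from the code of “Eliza”, “Eugene Goostman”, or any other actual chatbot.

```python
import re

# All rules below are hypothetical, written only to illustrate the style.
PRONOUN_SWAPS = {"i": "you", "me": "you", "my": "your", "you": "I", "your": "my", "am": "are"}
KEYWORD_REPLIES = {
    "chatbot": "Bots are such a boring topic. Where are you from?",
    "mother": "Tell me more about your family.",
}
FALLBACK = "Maybe, let's talk about something else?"


def reflect(text: str) -> str:
    """Swap first- and second-person words so a statement can be echoed back."""
    words = re.findall(r"[a-z']+", text.lower())
    return " ".join(PRONOUN_SWAPS.get(word, word) for word in words)


def reply(utterance: str) -> str:
    lowered = utterance.lower()
    # Trick 1: a canned response triggered by a keyword.
    for keyword, canned in KEYWORD_REPLIES.items():
        if keyword in lowered:
            return canned
    # Trick 2: echo the speaker's own statement with pronouns replaced.
    match = re.match(r"\s*i think (.+)", lowered)
    if match:
        return f"Why do you think {reflect(match.group(1))}?"
    # Trick 3: a vague, unresponsive fallback that changes the subject.
    return FALLBACK


print(reply("I think my program is clever."))
print(reply("How many legs does a camel have?"))
```

Nothing in the responder represents what a camel or a leg is, which is why a single factual question is enough to expose it.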

In fact, anyone with the slightest familiarity with chatbots, the modern incarnations of “Eliza”, could unmask “Eugene Goostman” quickly. (The judges at the recent competition were apparently not so familiar.) Scott Aaronson has provided a transcript of his own interaction with “Eugene Goostman”, which shows the telltale signs as soon as the computer’s first reply, which blatantly ignores an utterly trivial question and tries to change the subject:

Scott: Which is bigger, a shoebox or Mount Everest?

Eugene: I can’t make a choice right now. I should think it out later. And I forgot to ask you where you are from…

Here’s an appeal to cute, vague language:

Scott: How many legs does a camel have?

Eugene: Something between 2 and 4. Maybe, three? :-))) By the way, I still don’t know your specialty – or, possibly, I’ve missed it?

And here’s the repetition of a canned response:

Scott: No, I need to know that you’re not a chatbot. Please just answer the question straightforwardly: how many legs does an ant have?

Eugene: Something between 2 and 4. Maybe, three? :-))) Oh, what a fruitful conversation;-)

Here’s a canned keyword-triggered response, nonresponsive as usual:

Scott: In your opinion, does your existence demonstrate any idea or principle that wasn’t demonstrated just as convincingly by ELIZA, Joseph Weizenbaum’s chatbot from the 1960s?

Eugene: Eliza was a break-thru. All the bots after it were nothing but weak parodies, claiming to have “revolutionary improvements”.

And here’s “Eliza”’s trick of rewriting the judge’s utterance after pronoun replacement and (poorly executed) synonym substitution:

Scott: OK, I’ll count that as the second sensible thing you’ve said (grading generously). Don’t you think that a more convincing chatbot could be created, by using Google to trawl the entire web for plausible-sounding answers (analogous to what IBM’s Watson did)?

Eugene: Yes, I do think that a more convincing chatbot can be built by using google to trawl the all web for plausible – sounding – I hope I have right to have my own opinion. Maybe, let’s talk about something else? What would you like to discuss?

Literally every one of “Eugene”’s responses reflects its “Eliza”-like programming. It would be amusing, if it weren’t so predictable.

In summary, “Eugene Goostman” is not qualitatively superior to other chatbots, and certainly has not passed a true Turing Test. It isn’t even close.

1. In a parody of this approach, the late John McCarthy, professor of computer science at Stanford University and inventor of the term “artificial intelligence”, wrote a letter to the editor responding to a publication about an “Eliza”-like program that claimed to emulate a paranoid psychiatric patient. He presented his own experiments, which I described in my Turing Test book: “He had designed an even better program, which passed the same test. His also had the virtue of being a very inexpensive program, in these times of tight money. In fact you didn’t even need a computer for it. All you needed was an electric typewriter. His program modeled infantile autism. And the transcripts – you type in your questions, and the thing just sits there and hums – cannot be distinguished by experts from transcripts of real conversations with infantile autistic patients.”

Aaron Swartz’s legacy

January 13th, 2013

Government zealotry in prosecuting brilliant people is a repeating theme. It gave rise to one of the great intellectual tragedies of the 20th century, the death of Alan Turing after his appalling treatment by the British government. Sadly, we have just been presented with another case. Aaron Swartz committed suicide at his apartment in New York this week in the face of an overreaching prosecution of his JSTOR download action. I never met him, but I understand from those who knew him well that he was a brilliant, committed person who only acted intending to do good in the world. I’m on the record disagreeing with the particulars of the open access tactic for which he was being prosecuted, on the basis that it was counterproductive. But I empathize with the gut instinct that led to his effort. I hope that it will inspire us all to redouble our efforts to eliminate the needless restraints on the distribution and use of scholarship as Swartz himself was trying to achieve.

Talmud and the Turing Test

June 16th, 2012

…the Golem… Image of the statue of the Golem of Prague at the entrance to the Jewish Quarter of Prague by flickr user D_P_R. Used by permission (CC-BY 2.0).

Alan Turing, the patron saint of computer science, was born 100 years ago this week (June 23). I’ll be attending the Turing Centenary Conference at the University of Cambridge this week, and am honored to be giving an invited talk on “The Utility of the Turing Test”. The Turing Test was Alan Turing’s proposal for an appropriate criterion to attribute intelligence (that is, capacity for thinking) to a machine: you verify through blinded interactions that the machine has verbal behavior indistinguishable from a person’s.

In preparation for the talk, I’ve been looking at the early history of the premise behind the Turing Test, that language plays a special role in distinguishing thinking from nonthinking beings. I had thought it was an Enlightenment idea, that until the technological advances of the 16th and 17th centuries, especially clockwork mechanisms, the whole question of thinking machines would never have received substantive discussion. As I wrote earlier,

Clockwork automata provided a foundation on which one could imagine a living machine, perhaps even a thinking one. In the midst of the seventeenth-century explosion in mechanical engineering, the issue of the mechanical nature of life and thought is found in the philosophy of Descartes; the existence of sophisticated automata made credible Descartes’s doctrine of the bête-machine (beast-machine), that animals were machines. His argument for the doctrine incorporated the first indistinguishability test between human and machine, the first Turing test, so to speak.

But I’ve seen occasional claims here and there that there is in fact a Talmudic basis to the Turing Test. Could this be true? Was the Turing Test presaged, not by centuries, but by millennia?

Uniformly, the evidence for Talmudic discussion of the Turing Test is a single quote from Sanhedrin 65b.

Rava said: If the righteous wished, they could create a world, for it is written, “Your iniquities have been a barrier between you and your God.” For Rava created a man and sent him to R. Zeira. The Rabbi spoke to him but he did not answer. Then he said: “You are [coming] from the pietists: Return to your dust.”

Rava creates a Golem, an artificial man, but Rabbi Zeira recognizes it as nonhuman by its lack of language and returns it to the dust from which it was created.

This story certainly describes the use of language to unmask an artificial human. But is it a Turing Test precursor?

It depends on what one thinks are the defining aspects of the Turing Test. I take the central point of the Turing Test to be a criterion for attributing intelligence. The title of Turing’s seminal Mind article is “Computing Machinery and Intelligence”, wherein he addresses the question “Can machines think?”. Crucially, the question is whether the “test” being administered by Rabbi Zeira is testing the Golem for thinking, or for something else.

There is no question that verbal behavior can be used to test for many things that are irrelevant to the issues of the Turing Test. We can go much earlier than the Mishnah to find examples. In Judges 12:5–6 (King James Version):

5 And the Gileadites took the passages of Jordan before the Ephraimites: and it was so, that when those Ephraimites which were escaped said, Let me go over; that the men of Gilead said unto him, Art thou an Ephraimite? If he said, Nay;

6 Then said they unto him, Say now Shibboleth: and he said Sibboleth: for he could not frame to pronounce it right. Then they took him, and slew him at the passages of Jordan: and there fell at that time of the Ephraimites forty and two thousand.

The Gileadites use verbal indistinguishability (of the pronunciation of the original shibboleth) to unmask the Ephraimites. But they aren’t executing a Turing Test. They aren’t testing for thinking but rather for membership in a warring group.

What is Rabbi Zeira testing for? I’m no Talmudic scholar, so I defer to the experts. My understanding is that the Golem’s lack of language indicated not its own deficiency per se, but the deficiency of its creators. The Golem is imperfect in not using language, a sure sign that it was created by pietistic kabbalists who themselves are without sufficient purity.

Talmudic scholars note that the deficiency the Golem exhibits is intrinsically tied to the method by which the Golem is created: language. The kabbalistic incantations that ostensibly vivify the Golem were generated by mathematical combinations of the letters of the Hebrew alphabet. Contemporaneous understanding of the Golem’s lack of speech was connected to this completely formal method of kabbalistic letter magic: “The silent Golem is, prima facie, a foil to the recitations involved in the process of his creation.” (Idel, 1990, pages 264–5) The imperfection demonstrated by the Golem’s lack of language is not its inability to think, but its inability to wield the powers of language manifest in Torah, in prayer, in the creative power of the kabbalist incantations that gave rise to the Golem itself.

Only much later does interpretation start connecting language use in the Golem to soul, that is, to an internal flaw: “However, in the medieval period, the absence of speech is related to what was conceived then to be the highest human faculty: reason according to some writers, or the highest spirit, Neshamah, according to others.” (Idel, 1990, page 266, emphasis added)

By the 17th century, the time was ripe for consideration of whether nonhumans had a rational soul, and how one could tell. Descartes’s observations on the special role of language then serve as the true precursor to the Turing Test. Unlike the sole Talmudic reference, Descartes discusses the connection between language and thinking in detail and in several places — the Discourse on the Method, the Letter to the Marquess of Newcastle — and his followers — Cordemoy, La Mettrie — pick up on it as well. By Turing’s time, it is a natural notion, and one that Turing operationalizes for the first time in his Test.

The test of the Golem in the Sanhedrin story differs from the Turing Test in several ways. There is no discussion that the quality of language use was important (merely its existence), no mention of indistinguishability of language use (but Descartes didn’t either), and certainly no consideration of Turing’s idea of blinded controls. But the real point is that at heart the Golem test was not originally a test for the intelligence of the Golem at all, but of the purity of its creators.

References

Idel, Moshe. 1990. Golem: Jewish magical and mystical traditions on the artificial anthropoid, Albany, N.Y.: State University of New York Press.

Britain apologizes for treatment of Alan Turing

September 13th, 2009

- Image by Whimsical Chris via Flickr

Prime Minister Gordon Brown has apologized on behalf of the British government for the appalling treatment of Alan Turing, who was obliged to undergo chemical castration for the crime of being gay. Prime Minister Brown’s statement in the Telegraph follows an online petition drive that enlisted over 30,000 British citizens and residents, and a follow-on global petition with over 10,000 signatories worldwide.

Much has been made in the discussions surrounding the petition efforts and in the Prime Minister’s statement of Turing’s code-breaking efforts at Bletchley Park, which directly contributed to the Allied victory in World War II. Less mentioned, but also central to his legacy, are Turing’s seminal contributions to computer science. It is no exaggeration to say that Alan Turing was the progenitor of computer science, in his brief career building the foundation of theory, hardware, systems, artificial intelligence, even computational biology. His death at 41 as a result of the British government’s misguided “therapy” constitutes one of the great intellectual tragedies of the twentieth century. I commend Prime Minister Brown for his prompt and complete apology.

“Don’t ask, don’t tell” rights retention for scholarly articles

June 18th, 2009

A strange social contract has arisen in the scholarly publishing field, a kind of “don’t ask, don’t tell” approach to online distribution of articles by authors. Publishers officially forbid online distribution, authors do it anyway without telling the publishers, and publishers don’t ask them to stop even though it violates contractual obligations. What happens when you refuse to play that game? Read on.
