Moderating principles

July 25th, 2022

Some time around April 1994, I founded the Computation and Language E-Print Archive, the first preprint repository for a subfield of computer science. It was hosted on Paul Ginsparg’s arXiv platform, which at the time hosted only physics papers, having grown out of the original arXiv repository for high-energy physics theory, hep-th. The repository, cmp-lg (as it was then called), was superseded in 1999 by an open-access preprint repository for all of computer science, the Computing Research Repository (CoRR), which covered a broad range of subject areas, including computation and language. The CoRR organizing committee also decided to host CoRR on arXiv. I switched over from moderating cmp-lg to moderating for CoRR, and have continued to do so for the last – oh my god – 22 years.[1]

Articles in the arXiv are classified with a single primary subject class, and may have other subject classes as secondary. The switchover folded cmp-lg into the arXiv as articles tagged with the cs.CL (computation and language) subject class. I thus became the moderator for cs.CL.

A preprint repository like the arXiv is not a journal. There is no peer review applied to articles. There is essentially no quality control. That is not the role of a preprint repository. The role of a preprint repository is open distribution, not vetting. Nonetheless, some kind of control is needed to make sure that, at the very least, the documents being submitted are in fact scholarly articles and are appropriately tagged as to subfield, and that need has expanded with the dramatic increase in submissions to CoRR over the years. The primary duty of a moderator is to perform this vetting and triage: verifying that a submission meets the minimum standards for being characterized as a scholarly article, and that it falls within the purview of, say, cs.CL, as a primary or secondary subject class.

I am (along with the other arXiv moderators) thus regularly in the position of having to make decisions as to whether a document is a scholarly article or not. To a large extent, Justice Potter Stewart’s approach works reasonably well for scholarly articles: you know them when you see them. But over time, as more marginal cases come up, I’ve felt that tracking my thinking on the matter would be useful for maintaining consistency in my own practice. And now that I’ve done that for a while, I thought it might be useful to share my approach more broadly. That is the goal of this post.

The following thus constitutes (some of) the de facto policies that I use in making decisions as the moderator for the cs.CL collection in the CoRR part of the arXiv repository. I emphasize that these are my policies, not those of CoRR or the moderators of other CoRR subjects. (The arXiv folks themselves provide a more general guide for arXiv moderators.)

Whence function notation?

September 28th, 2015

I begin (in continental style, unmotivated and, frankly, gratuitously) by defining Ackermann’s function \(A\) over two nonnegative integers:

\[ A(m, n) = \left\{ \begin{array}{ll}
n + 1 & \mbox{if $m = 0$} \\
A(m-1, 1) & \mbox{if $m > 0$ and $n = 0$} \\
A(m-1, A(m, n-1)) & \mbox{if $m > 0$ and $n > 0$}
\end{array} \right. \]
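For programmers, the definition transcribes case by case into code. The following Python sketch is an illustration only; the name `ackermann` is an assumption, not anything from the original. The code itself uses the very notation under discussion, a function name followed by a parenthesized list of arguments. The function grows so explosively that only tiny arguments are practical, and larger ones will quickly exceed Python's default recursion limit.

```python
def ackermann(m: int, n: int) -> int:
    """Ackermann's function A(m, n), one branch per case of the definition."""
    if m < 0 or n < 0:
        raise ValueError("A is defined only for nonnegative integers")
    if m == 0:
        return n + 1                                  # A(0, n) = n + 1
    if n == 0:
        return ackermann(m - 1, 1)                    # A(m, 0) = A(m-1, 1)
    return ackermann(m - 1, ackermann(m, n - 1))      # A(m, n) = A(m-1, A(m, n-1))


print(ackermann(2, 3))  # 9
print(ackermann(3, 3))  # 61
```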

Image: “Death of Archimedes” from Charles F. Horne, editor, Great Men and Famous Women, Volume 3, 1894. Reproduced by Project Gutenberg. Used by permission.

You’ll have appreciated (unconsciously no doubt) that this definition makes repeated use of a notation in which a symbol precedes a parenthesized list of expressions, as for example \(f(a, b, c)\). This configuration represents the application of a function to its arguments. But you knew that. And why? Because everyone who has ever gotten through eighth grade math has been taught this notation. It is inescapable in high school algebra textbooks. It is a standard notation in the most widely used programming languages. It is the very archetype of common mathematical knowledge. It is, for God’s sake, in the Common Core. It is to mathematicians as water is to fish — so encompassing as to be invisible.

Something so widespread, so familiar — it’s hard to imagine how it could be otherwise. It’s difficult to un-see it as anything but function application. But it was not always thus. Someone must have invented this notation, some time in the deep past. Perhaps it came into being when mathematicians were still drawing their equations evanescently in dust and sand. Perhaps all record has been lost of that ur-application that engendered all later function application expressions. Nonetheless, someone must have come up with the idea.

|

| …that ur-application… Photo from the author. |

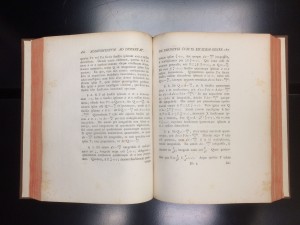

Surprisingly, the origins of the notation are not shrouded in mystery. The careful and exhaustive scholarship of mathematical historian Florian Cajori (1929, page 267) argues for a particular instance as originating the use of this now ubiquitous notation. Leonhard Euler, the legendary mathematician and perhaps the greatest innovator in successful mathematical notations, proposed the notation first in 1734, in Section 7 of his paper “Additamentum ad Dissertationem de Infinitis Curvis Eiusdem Generis” [“An Addition to the Dissertation Concerning an Infinite Number of Curves of the Same Kind”].

The paper was published in 1740 in Commentarii Academiae Scientiarum Imperialis Petropolitanae [Memoirs of the Imperial Academy of Sciences in St. Petersburg], Volume VII, covering the years 1734–35. A visit to the Widener Library stacks produced a copy of the volume, letterpress printed on crisp rag paper, from which I took the image shown above of the notational innovation.

Here is the pertinent sentence (with translation by Ian Bruce):

Quocirca, si \(f\left(\frac{x}{a} +c\right)\) denotet functionem quamcunque ipsius \(\frac{x}{a} +c\) fiet quoque \(dx - \frac{x\, da}{a}\) integrabile, si multiplicetur per \(\frac{1}{a} f\left(\frac{x}{a} + c\right)\).

[On account of which, if \(f\left(\frac{x}{a} +c\right)\) denotes some function of \(\frac{x}{a} +c\), it also makes \(dx - \frac{x\, da}{a}\) integrable, if it is multiplied by \(\frac{1}{a} f\left(\frac{x}{a} + c\right)\).]

There is the function symbol — the archetypal \(f\), even then, to evoke the concept of function — followed by its argument corralled within simple curves to make clear its extent.

It’s seductive to think that there is an inevitability to the notation, but this is an illusion born of habit. There are alternatives. Leibniz, for instance, used a boxy square-root-like diacritic over the arguments, with numbers to pick out the function being applied: \( \overline{a; b; c\,} \! | \! \lower .25ex {\underline{\,{}^1\,}} \! | \), and even Euler, in other later work, experimented with interposing a colon between the function and its arguments: \(f : (a, b, c)\). In the computing world, “reverse Polish” notation, found on HP calculators and the programming languages Forth and PostScript, has the function symbol following its arguments: \(a\,b\,c\,f\), whereas the quintessential functional programming language Lisp parenthesizes the function and its arguments: \((f\ a\ b\ c)\).
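To see why postfix notation needs no parentheses at all, here is a minimal Python sketch of a stack-based evaluator in the spirit of an HP calculator or Forth. The function table, including a three-argument `f`, is hypothetical, and the sketch assumes every function symbol has a fixed, known arity: operands are pushed onto a stack, and each function symbol pops as many arguments as it needs and pushes its result.

```python
import operator

# Hypothetical function table: symbol -> (arity, implementation).
FUNCTIONS = {
    "+": (2, operator.add),
    "*": (2, operator.mul),
    "neg": (1, operator.neg),
    "f": (3, lambda a, b, c: a * b + c),  # an illustrative three-argument f
}


def eval_rpn(tokens):
    """Evaluate a postfix (reverse Polish) expression given as a list of tokens."""
    stack = []
    for tok in tokens:
        if tok in FUNCTIONS:
            arity, fn = FUNCTIONS[tok]
            if len(stack) < arity:
                raise ValueError(f"{tok!r} needs {arity} arguments")
            args = stack[len(stack) - arity:]  # the arguments precede the symbol
            del stack[len(stack) - arity:]
            stack.append(fn(*args))
        else:
            stack.append(int(tok))  # anything else is taken as an integer literal
    if len(stack) != 1:
        raise ValueError("malformed expression: expected exactly one result")
    return stack[0]


print(eval_rpn("2 3 4 f".split()))    # f(2, 3, 4) = 10
print(eval_rpn("1 2 + 3 *".split()))  # (1 + 2) * 3 = 9
```

The evaluator's own `fn(*args)` call, of course, is written in Euler's notation.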

Finally, ML and its dialects follow Church’s lambda calculus in merely concatenating the function and its (single) argument — \(f \, a\) — using parentheses only to disambiguate structure. But even here, Euler’s notation stands its ground, for the single argument of a function might itself have components, a ‘tuple’ of items \(a\), \(b\), and \(c\) perhaps. The tuples might be indicated using an infix comma operator, thus \(a,b,c\). Application of a function to a single tuple argument can then mimic functions of multiple arguments, for instance, \(f (a, b, c)\) — the parentheses required by the low precedence of the tuple forming operator — and we are back once again to Euler’s notation. Clever, no? Do you see the lengths to which people will go to adhere to Euler’s invention? As much as we might try new notational ideas, this one has staying power.
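Python can only approximate the ML situation, but the distinction is visible there too. In this sketch (the function `f` and its body are illustrative assumptions), `f` takes exactly one argument that happens to be a three-element tuple. The bare comma in `t = 2, 3, 4` builds the tuple, much like ML’s infix comma. Unlike ML, though, Python’s call syntax treats commas inside the parentheses as argument separators, so `f(2, 3, 4)` is a call with three arguments and fails; passing the tuple inline needs a second pair of parentheses.

```python
def f(triple):
    """A function of one argument, which happens to be a 3-tuple."""
    a, b, c = triple  # destructure the single argument into its components
    return a * b + c


t = 2, 3, 4          # the bare comma forms a tuple, as in ML
print(f(t))          # one function applied to one (tuple) argument: 10
print(f((2, 3, 4)))  # an inline tuple needs its own parentheses: 10

try:
    f(2, 3, 4)       # three separate arguments, not one tuple
except TypeError as err:
    print("TypeError:", err)
```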

References

Florian Cajori. 1929. A History of Mathematical Notations, Volume II. Chicago: Open Court Publishing Company.

Leonhard Euler. 1734. Additamentum ad Dissertationem de Infinitis Curvis Eiusdem Generis. In Commentarii Academiae Scientiarum Imperialis Petropolitanae, Volume VII (1734–35), pages 184–202, 1740.

Inaccessible writing, in both senses of the term

September 29th, 2014

My colleague Steven Pinker has a nice piece up at the Chronicle of Higher Education on “Why Academics Stink at Writing”, accompanying the recent release of his new book The Sense of Style: The Thinking Person’s Guide to Writing in the 21st Century, of which I’m awaiting my pre-ordered copy. The last sentence of the Chronicle piece sums it up well:

In writing badly, we are wasting each other’s time, sowing confusion and error, and turning our profession into a laughingstock.

The essay provides a diagnosis of many of the common symptoms of fetid academic writing. He lists metadiscourse, professional narcissism, apologizing, shudder quotes, hedging, metaconcepts and nominalizations. It’s not breaking new ground, but these problems well deserve review.

I fall afoul of these myself, of course. (Nasty truth: I’ve used “inter alia” all too often, inter alia.) But I disagree with Pinker on one point: his condemnation of the particular style of metadiscourse that provides a roadmap of a paper. Here’s an example from a recent paper of mine.

After some preliminaries (Section 2), we present a set of known results relating context-free languages, tree homomorphisms, tree automata, and tree transducers to extend them for the tree-adjoining languages (Section 3), presenting these in terms of restricted kinds of functional programs over trees, using a simple grammatical notation for describing the programs. We review the definition of tree-substitution and tree-adjoining grammars (Section 4) and synchronous versions thereof (Section 5). We prove the equivalence between STSG and a variety of bimorphism (Section 6).

This certainly smacks of the first metadiscourse example Pinker provides:

“The preceding discussion introduced the problem of academese, summarized the principle theories, and suggested a new analysis based on a theory of Turner and Thomas. The rest of this article is organized as follows. The first section consists of a review of the major shortcomings of academic prose. …”

Who needs that sort of signposting in a 6,000-word essay? But in the context of a 50-page article, giving a kind of table of contents such as this doesn’t seem out of line. Much of the metadiscourse that Pinker excoriates is unneeded, but appropriate advance signposting can ease the job of the reader considerably. Sometimes, as in the other examples Pinker gives, “metadiscourse is there to help the writer, not the reader, since she has to put more work into understanding the signposts than she saves in seeing what they point to.” But anything that helps the reader to understand the high-level structure of an object as complex as a long article seems like a good thing to me.

The penultimate sentence of Pinker’s piece places poor academic writing in context:

Our indifference to how we share the fruits of our intellectual labors is a betrayal of our calling to enhance the spread of knowledge.

That sentiment applies equally well – arguably more so – to the venues where we publish. By placing our articles in journals that lock up access tightly we are also betraying our calling. And it doesn’t matter how good the writing is if it can’t be read in the first place.

Switching to Markdown for scholarly article production

August 29th, 2014

With few exceptions, scholars would be better off writing their papers in a lightweight markup format called Markdown, rather than using a word-processing program like Microsoft Word. This post explains why, and reveals a hidden agenda as well.[1]

Microsoft Word is not appropriate for scholarly article production

Image: “Old two pan balance” from Nikodem Nijaki at Wikimedia Commons. Used by permission.

Before turning to lightweight markup, I review the problems with Microsoft Word as the lingua franca for producing scholarly articles. This ground has been heavily covered. (Here’s a recent example.) The problems include:

- Substantial learning curve: Microsoft Word is a complicated program that is difficult to use well.

- Appearance versus structure: Word-processing programs like Word conflate composition with typesetting. They work by having you specify how a document should look, not how it is structured. A classic example is section headings. In a typical markup language, you specify that something is a heading by marking it as a heading. In a word-processing program you might specify that something is a heading by increasing the font size and making it bold. Yes, Word has “paragraph styles”, and some people sometimes use them more or less properly, if they can figure out how. But most people don’t, or don’t do so consistently, and the resultant chaos has been well documented. It has led to a whole industry of people who specialize in massaging Word files into some semblance of consistency.

- Backwards compatibility: Word-processing program file formats have a tendency to change. Word itself has gone through multiple incompatible file formats over the last few decades, one every couple of years. Over time, you have to keep up with the latest version of the software to do anything at all with a new document, but updating your software may well mean that old documents are no longer identically rendered. With Markdown, no software is necessary to read documents. They are just plain text files with relatively intuitive markings, and the underlying file format (UTF-8 née ASCII) is backward compatible to 1963. Further, typesetting documents in Markdown to get the “nice” version is based on free and open-source software (markdown, pandoc) and built on other longstanding open-source standards (LaTeX, BibTeX).

- Poor typesetting: Microsoft Word does a generally poor job of typesetting, as exemplified by its hyphenation, kerning, and mathematical typesetting. This shouldn’t be surprising, since the whole premise of a word-processing program means that the same interface must handle both specification and typesetting in real time, a recipe for compromise.

- Lock-in: Because Microsoft Word’s file format is effectively proprietary, users are locked in to a single software provider for any and all functionality. The file formats are so complicated that faithful alternative implementations are effectively impossible.

Lightweight markup is the solution

The solution is to use a markup format that allows specification of the document (providing its logical structure) separate from the typesetting of that document. Your document is specified – that is, generated and stored – as straight text. Any formatting issues are handled not by changing the formatting directly via a graphical user interface but by specifying it in a textual notation. For instance, in the HTML markup language, a word or phrase that should be emphasized is textually indicated by surrounding it with <em>…</em>. HTML and other powerful markup formats like LaTeX and various XML formats carry relatively large overheads. They are complex to learn and difficult to read. (Typing raw XML is nobody’s idea of fun.) Ideally, we would want a markup format to be lightweight, that is, simple, portable, and human-readable even in its raw state.

Markdown is just such a lightweight markup language. In Markdown, emphasis is textually indicated by surrounding the phrase with asterisks, as is familiar from email conventions, for example, *lightweight*. See, that wasn’t so hard. Here’s another example: A bulleted list is indicated by prepending each item on a separate line with an asterisk, like this:

    * First item
    * Second item

which specifies the list

- First item
- Second item

Because specification and typesetting are separated, software is needed to convert from one to the other, to typeset the specified document. For reasons that will become clear later, I recommend the open-source software pandoc. Generally, scholars will want to convert their documents to PDF (though pandoc can convert to a huge variety of other formats). To convert file.md (the Markdown-format specification file) to PDF, the command

    pandoc file.md -o file.pdf

suffices. Alternatively, there are many editing programs that allow entering, editing, and typesetting Markdown. I sometimes use Byword. In fact, I’m using it now.
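Pandoc is a substantial program, but the basic idea of converting a textual specification into typeset output can be shown in miniature. The following Python sketch is a toy, not pandoc and not a Markdown implementation: it recognizes only the two constructs described above, asterisk-delimited emphasis and lines beginning with an asterisk and a space as bulleted items, and renders them as HTML. The function names are hypothetical.

```python
import html
import re

# *phrase* -> <em>phrase</em>; the phrase may not start with a space or asterisk.
EMPHASIS = re.compile(r"\*([^*\s][^*]*)\*")


def render_inline(text):
    """Escape HTML special characters, then turn *phrase* into <em>phrase</em>."""
    return EMPHASIS.sub(r"<em>\1</em>", html.escape(text))


def toy_markdown_to_html(source):
    """Render a tiny Markdown subset: '* ' bulleted items and *emphasis*."""
    out, in_list = [], False
    for line in source.splitlines():
        if line.startswith("* "):          # a bulleted item
            if not in_list:
                out.append("<ul>")
                in_list = True
            out.append(f"<li>{render_inline(line[2:].strip())}</li>")
            continue
        if in_list:                        # any other line ends the list
            out.append("</ul>")
            in_list = False
        if line.strip():                   # non-blank text becomes a paragraph
            out.append(f"<p>{render_inline(line.strip())}</p>")
    if in_list:                            # close a list that runs to the end
        out.append("</ul>")
    return "\n".join(out)


print(toy_markdown_to_html("Markdown is *lightweight*.\n* First item\n* Second item"))
```

Real converters handle nesting, escaping rules, and dozens of other constructs; the point here is only that the specification and its rendering live in separate places.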

Markup languages range from the simple to the complex. I argue for Markdown for four reasons:

- Basic Markdown, sufficient for the vast majority of non-mathematical scholarly writing, is dead simple to learn and remember, because the markup notations were designed to mimic the kinds of textual conventions that people are used to – asterisks for emphasis and for indicating bulleted items, for instance. The coverage of this basic part of Markdown includes: emphasis, section structure, block quotes, bulleted and numbered lists, simple tables, and footnotes.

- Markdown is designed to be readable and the specified format understandable even in its plain text form, unlike heavier weight markup languages such as HTML.

- Markdown is well supported by a large ecology of software systems for entering, previewing, converting, typesetting, and collaboratively editing documents.

- Simple things are simple. More complicated things are more complicated, but not impossible. The extensions to Markdown provided by pandoc cover more or less the rest of what anyone might need for scholarly documents, including links, cross-references, figures, citations and bibliographies (via BibTeX), mathematical typesetting (via LaTeX), and much more. For instance, this equation (the Cauchy–Schwarz inequality) will typeset well in generated PDF files, and even in HTML pages using the wonderful MathJax library:

  \[ \left( \sum_{k=1}^n a_k b_k \right)^2 \leq \left( \sum_{k=1}^n a_k^2 \right) \left( \sum_{k=1}^n b_k^2 \right) \]

  (Pandoc also provides some extensions that simplify and extend basic Markdown in quite nice ways, for instance, definition lists, strikeout text, and a simpler notation for tables.)

Above, I claimed that scholars should use Markdown “with few exceptions”. The exceptions are:

- The document requires nontrivial mathematical typesetting. In that case, you’re probably better off using LaTeX. Anyone writing a lot of mathematics has long since given up word processors and ought to know LaTeX anyway. Still, I’ll often do a first draft in Markdown with LaTeX for the math-y bits. Pandoc allows LaTeX to be included within a Markdown file (as I’ve done above), and preserves the LaTeX markup when converting the Markdown to LaTeX. From there, it can be typeset with LaTeX. Microsoft Word would certainly not be appropriate for this case.

- The document requires typesetting with highly refined or specialized aspects. I’d probably go with LaTeX here too, though desktop publishing software (InDesign) is also appropriate if there’s little or no mathematical typesetting required. Microsoft Word would not be appropriate for this case either.

Some have proposed that we need a special lightweight markup language for scholars. But Markdown is sufficiently close, and has such a strong community of support and software infrastructure, that it is more than sufficient for the time being. Further development would of course be helpful, so long as the urge to add “features” doesn’t overwhelm its core simplicity.

The hidden agenda

I have a hidden agenda. Markdown is sufficient for the bulk of cases of composing scholarly articles, and simple enough to learn that academics might actually use it. Markdown documents are also typesettable according to a separate specification of document style, and retargetable to multiple output formats (PDF, HTML, etc.).[2] Thus, Markdown could be used as the production file format for scholarly journals, which would eliminate the need for converting between the authors’ manuscript version and the publisher’s internal format, with all the concomitant errors that process is prone to produce.

In computer science, we have by now moved almost completely to a system in which authors provide articles in LaTeX so that no retyping or recomposition of the articles needs to be done for the publisher’s typesetting system. Publishers just apply their LaTeX style files to our articles. The result has been a dramatic improvement in correctness and efficiency. (It is in part due to such an efficient production process that the cost of running a high-end computer science journal can be so astoundingly low.)

Even better, there is a new breed of collaborative web-based document editing tools being developed that use Markdown as their core file format, tools like Draft and Authorea. They provide multi-author editing, versioning, version comparison, and merging. These tools could constitute the system by which scholarly articles are written, collaborated on, revised, copyedited, and moved to the journal production process, generating efficiencies for a huge range of journals, efficiencies that we’ve enjoyed in computer science and mathematics for years.

As Rob Walsh of ScholasticaHQ says, “One of the biggest bottlenecks in Open Access publishing is typesetting. It shouldn’t be.” A production ecology built around Markdown could be the solution.

[1] Many of the ideas in this post are not new. Complaints about WYSIWYG word-processing programs have a long history. Here’s a particularly trenchant diatribe pointing out the superiority of disentangling composition from typesetting. The idea of “scholarly Markdown” as the solution is also not new. See this post or this one for similar proposals. I go further in viewing certain current versions of Markdown (as implemented in Pandoc) as practical already for scholarly article production purposes, though I support coordinated efforts that could lead to improved lightweight markup formats for scholarly applications. Update September 22, 2014: I’ve just noticed a post by Dennis Tenen and Grant Wythoff at The Programming Historian on “Sustainable Authorship in Plain Text using Pandoc and Markdown” giving a tutorial on using these tools for writing scholarly history articles.

[2] As an example, I’ve used this very blog post. Starting with the Markdown source file (which I’ve attached to this post), I first generated HTML output for copying into the blog using the command

    pandoc -S --mathjax --base-header-level=3 markdownpost.md -o markdownpost.html

A nicely typeset version using the American Mathematical Society’s journal article document style can be generated with

    pandoc markdownpost.md -V documentclass:amsart -o markdownpost-amsart.pdf

To target the style of ACM transactions instead, the following command suffices:

    pandoc markdownpost.md -V documentclass:acmsmall -o markdownpost-acmsmall.pdf

Both PDF versions are also attached to this post.

Attachments

- markdownpost.md: The source file for this post in Markdown format

- markdownpost-amsart.pdf: The post rendered using pandoc according to AMS journal style

- markdownpost-acmsmall.pdf: The post rendered using pandoc according to ACM journal style

Thoughts on founding open-access journals

November 21st, 2013

Image: “reference” by Flickr user Sara S. Used by permission.

Precipitated by a recent request to review some proposals for new open-access journals, I spent some time gathering my own admittedly idiosyncratic thoughts on some of the issues that should be considered when founding new open-access journals. I make them available here. Good sources for more comprehensive information on launching and operating open-access journals are SPARC’s open-access journal publishing resource index and the Open Access Directory’s guides for OA journal publishers.

Unlike most of my posts, this one may be augmented over time, without explicit marking of the changes. Your thoughts on additions to the topics below (via comments or email) are appreciated. A version number (currently version 1.0) will track the changes for reference.

It is better to flip a journal than to found one

The world has enough journals. Adding new open-access journals as alternatives to existing ones may be useful if there are significant numbers of high quality articles being generated in a field for which there is no reasonable open-access venue for publication. Such cases are quite rare, especially given the rise of open-access “megajournals” covering the sciences (PLoS ONE, Scientific Reports, AIP Advances, SpringerPlus, etc.), and the social sciences and humanities (SAGE Open). Where there are already adequate open-access venues (even if no one journal is “perfect” for the field), scarce resources are probably better spent elsewhere, especially on flipping journals from closed to open access.

Admittedly, the world does not have enough open-access journals (at least high-quality ones). So if it is not possible to flip a journal, founding a new one may be a reasonable fallback position, but it is definitely the inferior alternative.

Licensing should be by CC-BY

As long as you’re founding a new journal, its contents should be as open as possible consistent with appropriate attribution. That exactly characterizes the CC-BY license. It’s also a tremendously simple approach. Once the author grants a CC-BY license, no further rights need be granted to the publisher. There’s no need for talk about granting the publisher a nonexclusive license to publish the article, etc., etc. The CC-BY license already allows the publisher to do so. There’s no need to talk about what rights the author retains, since the author retains all rights subject to the nonexclusive CC-BY license. I’ve made the case for a CC-BY license at length elsewhere.

It’s all about the editorial board

The main product that a journal is selling is its reputation. A new journal with no track record needs high-quality submissions to bootstrap that reputation, and at the start, nothing does more to convince authors to submit high-quality work to the journal than its editorial board. Getting high-profile names somewhere on the masthead by the time of the official launch is the most important thing for the journal to do. (“We can add more people later” is a risky proposition. You may not get a second chance to make a first impression.)

Getting high-profile names on your board may occur naturally if you use the expedient of flipping an existing closed-access journal, thereby stealing the board, which also has the benefit of acquiring the journal’s previous reputation and eliminating one more subscription journal.

Another good idea for jumpstarting a journal’s reputation is to prime the article pipeline by inviting leaders in the field to submit their best articles to the journal before its official launch, so that the journal announcement can provide information on forthcoming articles by luminaries.

Follow ethical standards

Adherence to the codes of conduct of the Open Access Scholarly Publishers Association (OASPA) and the Committee on Publication Ethics (COPE) should be fundamental. Membership in the organizations is recommended; the fees are extremely reasonable.

You can outsource the process

There is a lot of interest among certain institutions in founding new open-access journals, institutions that may have no special expertise in operating journals. A good solution is to outsource the operation of the journal to an organization that does have special expertise, namely, a journal publisher. There are several such publishers who have experience running open-access journals effectively and efficiently. Some are exclusively open-access publishers, for example, Co-Action Publishing, Hindawi Publishing, and Ubiquity Press. Others handle both open- and closed-access journals: HighWire Press, Oxford University Press, ScholasticaHQ, Springer/BioMed Central, Wiley. This is not intended as a complete listing (the Open Access Directory has a complementary offering), nor in any sense an endorsement of any of these organizations, just a comment that shopping the journal around to a publishing partner may be a good idea. Especially given the economies of scale that exist in journal publishing, an open-access publishing partner may allow the journal to operate much more economically than establishing a whole organization in-house.

Certain functionality should be considered a baseline

Geoffrey Pullum, in his immensely satisfying essays “Stalking the Perfect Journal” and “Seven Deadly Sins in Journal Publishing”, lists his personal criteria in journal design. They are a good starting point, but need updating for the era of online distribution. (There is altogether too much concern with the contents of the journal’s spine text for instance.)

- Reviewing should be anonymous (with regard to the reviewers) and blind (with regard to the authors), except where a commanding argument can be given for experimenting with alternatives.

- Every article should be preserved in one (or better, more than one) preservation system. CLOCKSS, Portico[1], or a university or institutional digital archival repository are good options.

- Every article should have complete bibliographic metadata on the first page, including license information (a simple reference to CC-BY; see above), and (as per Pullum) first and last page numbers.

- The journal should provide DOIs for its articles. OASPA membership is an inexpensive way to acquire the ability to assign DOIs. An article’s DOI should be included in the bibliographic metadata on the first page.

There’s additional functionality beyond this baseline that would be ideal, though the tradeoff against the additional effort required would have to be evaluated.

- Provide article-level metrics, especially download statistics, though other “altmetrics” may be helpful.

- Provide access to the articles in multiple formats in addition to PDF: HTML, XML with the NLM DTD.

- Provide the option for readers to receive alerts of new content through emails and RSS feeds.

- Encourage authors to provide the underlying data to be distributed openly as well, and provide the infrastructure for them to do so.

Take advantage of the networked digital era

Many journal publishing conventions of long standing are no longer well motivated in the modern era. Here are a few examples. They are not meant to be exhaustive. You can probably think of others. The point is that certain standard ideas can and should be rethought.

- There is no longer any need for “issues” of journals. Each article should be published as soon as it is finished, no later and no sooner. If you’d like, an “issue” number can be assigned that is incremented for each article. (Volumes, incremented annually, are still necessary because many aspects of the scholarly publishing and library infrastructure make use of them. They are also useful for the purpose of characterizing a bolus of content for storage and preservation purposes.)

- Endnotes, a relic of the day when typesetting was a complicated and fraught process that was eased by a human being not having to determine how much space to leave at the bottom of a page for footnotes, should be permanently retired. Footnotes are far easier for readers (which is the whole point really), and computers do the drudgery of calculating the space for them.

- Page limits are silly. In the old physical journal days, page limits had two purposes. They were necessary because journal issues came in quanta of page signatures, and therefore had fundamental physical limits to the number of pages that could be included. A network-distributed journal no longer has this problem. Page limits also serve the purpose of constraining the author to write relatively succinctly, easing the burden on reviewer and (eventually) reader. But for this purpose the page is not a robust unit of measurement of the constrained resource, the reviewers’ and the readers’ attention. One page can hold anything from a few hundred to a thousand or more words. If limits are to be required, they should be stated in appropriate units such as the number of words. The word count should not include figures, tables, or bibliography, as they impinge on readers’ attention in a qualitatively different way.

- Author-date citation is far superior to numeric citation in every way except for the amount of space and ink required. Now that digital documents use no physical space or ink, there is no longer an excuse for numeric citations. Similarly, ibid. and op. cit. should be permanently retired. I appreciate that different fields have different conventions on these matters. That doesn’t change the fact that those fields that have settled on numeric citations or ibidded footnotes are on the wrong side of technological history.

- Extensive worry about and investment in fancy navigation within and among the journal’s articles is likely to be a waste of time, effort, and resources. To a first approximation, all accesses to articles in the journal will come from sites higher up in the web food chain: the Googles and Bings, the BASEs and OAIsters of the world. Functionality that simplifies navigation among articles across the whole scholarly literature (cross-linked DOIs in bibliographies, for instance, or linked open metadata of various sorts) is a different matter.

Think twice

In the end, think long and hard about whether founding a new open-access journal is the best use of your time and your institution’s resources in furthering the goals of open scholarly communication. Operating a journal is not free, either in cash or in time. Perhaps a better use of resources is making sure that academic institutions and funders are set up to underwrite the existing open-access journals in the optimal way. But if it’s the right thing to do, do it right.

- A caveat on Portico’s journal preservation service: The service is designed to release its stored articles when a “trigger event” occurs, for instance, if the publisher ceases operations. Unfortunately, Portico doesn’t release the journal contents openly, but only to its library participants, even for OA journals. However, if the articles were licensed under CC-BY, any participating library could presumably reissue them openly.

When practice and logic conflict, change the practice

January 3rd, 2013

…our little tiff in the late 18th century… “NYC – Metropolitan Museum of Art: Washington Crossing the Delaware” image by flickr user wallyg. Used by permission.

I’m shortly off to give a talk at the annual meeting of the Linguistic Society of America (on why open access is better for scholarly societies, which I’ll be blogging about soon), but in the meantime, a linguistically related post about punctuation.

Careful readers of this blog (are there any careful readers of this blog? are there any readers at all?) will note that I generally eschew the peculiarly American convention of moving punctuation within a closing quotation mark. Examples from The Occasional Pamphlet abound: here, here, here, here, here, here, here, and here. And that’s just from 2012. It’s surprising how often this punctuation convention comes into play.

Instead, I use the convention that only the stuff being quoted is put within the quotation marks. This is sometimes called the “British” convention, despite the fact that other nationalities use it as well, presumably to emphasize the American/British dualism extant from our little tiff in the late 18th century. I use the “British” convention because the “American” convention is, in technical terms, stupid.

The story goes that punctuation appearing within the quotation mark is more aesthetically pleasing than punctuation outside the quotation mark. But even if that were true, clarity trumps beauty. Moving the punctuation means that when you see a quoted string with some final punctuation, you don’t know if that punctuation is or is not intended to be part of the thing being quoted; it is systematically ambiguous.
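The ambiguity can be made concrete with a toy model. This is a sketch of my own under simplifying assumptions, not a rendering of any style guide: the functions `logical` and `american` are hypothetical names, and the model considers only a sentence that ends in a quoted string followed by a sentence-final period, written with single curly quotes as in the Pullum example quoted later in this post. Under the logical convention, distinct quoted strings always yield distinct sentences, so the quoted material can be read back off the page; under the American convention, two different strings collapse onto the same sentence.

```python
# Toy model of two quotation-punctuation conventions (illustrative only).
# Assumption: a sentence ends with a quoted string followed by its own period,
# and quotations use single curly quotes. All function names are hypothetical.

def logical(prefix: str, quoted: str) -> str:
    """Only the quoted material goes inside the marks; the sentence's period follows them."""
    return f"{prefix} ‘{quoted}’."


def american(prefix: str, quoted: str) -> str:
    """The sentence's period moves inside the closing mark.

    If the quoted material already ends in a period, the two periods merge into one.
    """
    body = quoted if quoted.endswith(".") else quoted + "."
    return f"{prefix} ‘{body}’"


def recover_logical(sentence: str) -> str:
    """Invert logical(): return the text between the opening mark and the last closing mark.

    Assumes the prefix itself contains no opening mark.
    """
    start = sentence.index("‘") + 1
    end = sentence.rindex("’")
    return sentence[start:end]


prefix = "Pullum notes that it takes N keystrokes to type the string"
short, longer = "the string", "the string."

print(len(short), len(longer))                              # 10 11
print(logical(prefix, short) == logical(prefix, longer))    # False: still distinguishable
print(american(prefix, short) == american(prefix, longer))  # True: the distinction is lost
print(recover_logical(logical(prefix, longer)))             # the string.
```

Nothing in the model depends on these particular strings: any two quoted strings that differ only by a final period collide under `american`, which is exactly the systematic ambiguity at issue.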

Apparently, my view is highly controversial. For example, when working with MIT Press on my book on the Turing test, my copy editor (who, by the way, was wonderful, and amazingly patient) moved all my punctuation around to satisfy the American convention. I moved them all back. She moved them again. We got into a long discussion of the matter; it seems she had never confronted an author who felt strongly about punctuation before. (I presume she had never copy-edited Geoff Pullum, from whom more later.) As a compromise, we left the punctuation the way I liked it—mostly—but she made me add the following prefatory editorial note:

Throughout the text, the American convention of moving punctuation within closing quotation marks (whether or not the punctuation is part of what is being referred to) is dropped in favor of the more logical and consistent convention of placing only the quoted material within the marks.

I would now go on to explain why the “British” convention is better than the “stupid” convention, except that Geoff Pullum has already done a far better job of it than I ever could. Here is an excerpt from his essay “Punctuation and human freedom”, published in Natural Language and Linguistic Theory and reproduced in his book The Great Eskimo Vocabulary Hoax. I recommend the entire essay to you.

I want you to first consider the string ‘the string’ and the string ‘the string.’, noting that it takes ten keystrokes to type the string in the first set of quotes, and eleven to type the string in the second pair. Imagine you wanted to quote me on the latter point. You might want to say (1).

(1) Pullum notes that it takes eleven keystrokes to type the string ‘the string.’

No problem there; (1) is true (and grammatical if we add a final period). But now suppose you want to say this:

(2) Pullum notes that it takes ten keystrokes to type the string ‘the string’.

You won’t be able to publish it. Your copy-editor will change it before the first proof stage to (3), which is false (though regarded by copy-editors as grammatical):

(3) Pullum notes that it takes ten keystrokes to type the string ‘the string.’

Why? Because the copy-editor will insist that when a sentence ends with a quotation, the closing quotation mark must follow the punctuation mark.

I say this must stop. Linguists have a duty to the public to use their expertise in arguing for changes to the fabric of society when its interests are threatened. And we have such a situation here.

What say we all switch over to the logical quotation punctuation approach and save the fabric of society, shall we?