Moderating principles

July 25th, 2022

Some time around April 1994, I founded the Computation and Language E-Print Archive, the first preprint repository for a subfield of computer science. It was hosted on Paul Ginsparg’s arXiv platform, which at the time had been hosting only physics papers, built out from the original arXiv repository for high-energy physics theory, hep-th. The repository, cmp-lg (as it was then called), was superseded in 1999 by an open-access preprint repository for all of computer science, the Computing Research Repository (CoRR), which covered a broad range of subject areas, including computation and language. The CoRR organizing committee also decided to host CoRR on arXiv. I switched over to moderating for the CoRR repository from cmp-lg, and have continued to do so for the last – oh my god – 22 years.[1]

Articles in the arXiv are classified with a single primary subject class, and may have other subject classes as secondary. The switchover folded cmp-lg into the arXiv as articles tagged with the cs.CL (computation and language) subject class. I thus became the moderator for cs.CL.

A preprint repository like the arXiv is not a journal. There is no peer review applied to articles. There is essentially no quality control. That is not the role of a preprint repository. The role of a preprint repository is open distribution, not vetting. Nonetheless, some kind of control is needed in making sure that, at the very least, the documents being submitted are in fact scholarly articles and are appropriately tagged as to subfield, and that need has expanded with the dramatic increase in submissions to CoRR over the years. The primary duty of a moderator is to perform this vetting and triage: verifying that a submission possesses the minimum standards for being characterized as a scholarly article, and that it falls within the purview of, say, cs.CL, as a primary or secondary subject class.

I am (along with the other arXiv moderators) thus regularly in the position of having to make decisions as to whether a document is a scholarly article or not. To a large extent, Justice Potter Stewart’s approach works reasonably well for scholarly articles: you know them when you see them. But over time, as more marginal cases come up, I’ve felt that tracking my thinking on the matter would be useful for maintaining consistency in my own practice. And now that I’ve done that for a while, I thought it might be useful to share my approach more broadly. That is the goal of this post.

The following thus constitutes (some of) the de facto policies that I use in making decisions as the moderator for the cs.CL collection in the CoRR part of the arXiv repository. I emphasize that these are my policies, not those of CoRR or the moderators of other CoRR subjects. (The arXiv folks themselves provide a more general guide for arXiv moderators.)

Upcoming in Tromsø

November 11th, 2015

Image: Northern lights over Tromsø

I’ll be visiting Tromsø, Norway to attend the Tenth Annual Munin Conference on Scholarly Publishing, which is being held November 30 to December 1. I’m looking forward to the talks, including keynotes from Randy Schekman and Sabine Hossenfelder and an interview by Caroline Sutton of my colleague Peter Suber, director of Harvard’s Office for Scholarly Communication. My own keynote will be on “The role of higher education institutions in scholarly publishing and communication”. Here’s the abstract:

Institutions of higher education are in a double bind with respect to scholarly communication: On the one hand, they need to support the research needs of their students and researchers by providing access to the journals that comprise the archival record of scholarship. Doing so requires payment of substantial subscription fees. On the other hand, they need to provide the widest possible dissemination of works by those same researchers — the fruits of that very research — which itself incurs costs. I address how these two goals, each of which demands outlays of substantial funds, can best be honored. In the course of the discussion, I provide a first look at some new results on predicting journal usage, which allows for optimizing subscriptions.

Update: The video of my talk at the Munin conference is now available.

In support of behavioral tests of intelligence

May 7th, 2015

…“blockhead” argument… “Blockhead by Paul McCarthy @ Tate Modern” image from flickr user Matt Hobbs. Used by permission.

Alan Turing proposed what is the best known criterion for attributing intelligence, the capacity for thinking, to a computer. We call it the Turing Test, and it involves comparing the computer’s verbal behavior to that of people. If the two are indistinguishable, the computer passes the test. This might be cause for attributing intelligence to the computer.

Or not. The best argument against a behavioral test of intelligence (like the Turing Test) is that maybe the exhibited behaviors were just memorized. This is Ned Block’s “blockhead” argument in a nutshell. If the computer just had all its answers literally encoded in memory, then parroting those memorized answers is no sign of intelligence. And how are we to know from a behavioral test like the Turing Test that the computer isn’t just such a “memorizing machine”?

In my new(ish) paper, “There can be no Turing-Test–passing memorizing machines”, I address this argument directly. My conclusion can be found in the title of the article. By careful calculation of the information and communication capacity of space-time, I show that any memorizing machine could pass a Turing Test of no more than a few seconds, which is no Turing Test at all. Crucially, I make no assumptions beyond the brute laws of physics. (One distinction of the article is that it is one of the few philosophy articles in which a derivative is taken.)

The article is published in the open access journal Philosophers’ Imprint, and is available here along with code to computer-verify the calculations.

Inaccessible writing, in both senses of the term

September 29th, 2014

My colleague Steven Pinker has a nice piece up at the Chronicle of Higher Education on “Why Academics Stink at Writing”, accompanying the recent release of his new book The Sense of Style: The Thinking Person’s Guide to Writing in the 21st Century, which I’m awaiting my pre-ordered copy of. The last sentence of the Chronicle piece summarizes well:

In writing badly, we are wasting each other’s time, sowing confusion and error, and turning our profession into a laughingstock.

The essay provides a diagnosis of many of the common symptoms of fetid academic writing. He lists metadiscourse, professional narcissism, apologizing, shudder quotes, hedging, metaconcepts and nominalizations. It’s not breaking new ground, but these problems well deserve review.

I fall afoul of these myself, of course. (Nasty truth: I’ve used “inter alia” all too often, inter alia.) But one issue I disagree with Pinker on is the particular style of metadiscourse he condemns that provides a roadmap of a paper. Here’s an example from a recent paper of mine.

After some preliminaries (Section 2), we present a set of known results relating context-free languages, tree homomorphisms, tree automata, and tree transducers to extend them for the tree-adjoining languages (Section 3), presenting these in terms of restricted kinds of functional programs over trees, using a simple grammatical notation for describing the programs. We review the definition of tree-substitution and tree-adjoining grammars (Section 4) and synchronous versions thereof (Section 5). We prove the equivalence between STSG and a variety of bimorphism (Section 6).

This certainly smacks of the first metadiscourse example Pinker provides:

“The preceding discussion introduced the problem of academese, summarized the principal theories, and suggested a new analysis based on a theory of Turner and Thomas. The rest of this article is organized as follows. The first section consists of a review of the major shortcomings of academic prose. …”

Who needs that sort of signposting in a 6,000-word essay? But in the context of a 50-page article, giving a kind of table of contents such as this doesn’t seem out of line. Much of the metadiscourse that Pinker excoriates is unneeded, but appropriate advance signposting can ease the job of the reader considerably. Sometimes, as in the other examples Pinker gives, “metadiscourse is there to help the writer, not the reader, since she has to put more work into understanding the signposts than she saves in seeing what they point to.” But anything that helps the reader to understand the high-level structure of an object as complex as a long article seems like a good thing to me.

The penultimate sentence of Pinker’s piece places poor academic writing in context:

Our indifference to how we share the fruits of our intellectual labors is a betrayal of our calling to enhance the spread of knowledge.

That sentiment applies equally well – arguably more so – to the venues where we publish. By placing our articles in journals that lock up access tightly we are also betraying our calling. And it doesn’t matter how good the writing is if it can’t be read in the first place.

Switching to Markdown for scholarly article production

August 29th, 2014

With few exceptions, scholars would be better off writing their papers in a lightweight markup format called Markdown, rather than using a word-processing program like Microsoft Word. This post explains why, and reveals a hidden agenda as well.[1]

Microsoft Word is not appropriate for scholarly article production

…lightweight… “Old two pan balance” image from Nikodem Nijaki at Wikimedia Commons. Used by permission.

Before turning to lightweight markup, I review the problems with Microsoft Word as the lingua franca for producing scholarly articles. This ground has been heavily covered. (Here’s a recent example.) The problems include:

- Substantial learning curve

  Microsoft Word is a complicated program that is difficult to use well.

- Appearance versus structure

  Word-processing programs like Word conflate composition with typesetting. They work by having you specify how a document should look, not how it is structured. A classic example is section headings. In a typical markup language, you specify that something is a heading by marking it as a heading. In a word-processing program you might specify that something is a heading by increasing the font size and making it bold. Yes, Word has “paragraph styles”, and some people sometimes use them more or less properly, if you can figure out how. But most people don’t, or don’t do so consistently, and the resultant chaos has been well documented. It has led to a whole industry of people who specialize in massaging Word files into some semblance of consistency.

- Backwards compatibility

  Word-processing program file formats have a tendency to change. Word itself has gone through multiple incompatible file formats in the last decades, one every couple of years. Over time, you have to keep up with the latest version of the software to do anything at all with a new document, but updating your software may well mean that old documents are no longer identically rendered. With Markdown, no software is necessary to read documents. They are just plain text files with relatively intuitive markings, and the underlying file format (UTF-8 née ASCII) is backward compatible to 1963. Further, typesetting documents in Markdown to get the “nice” version is based on free and open-source software (markdown, pandoc) and built on other longstanding open source standards (LaTeX, BibTeX).

- Poor typesetting

  Microsoft Word does a generally poor job of typesetting, as exemplified by hyphenation, kerning, mathematical typesetting. This shouldn’t be surprising, since the whole premise of a word-processing program means that the same interface must handle both the specification and typesetting in real-time, a recipe for having to make compromises.

- Lock-in

  Because Microsoft Word’s file format is effectively proprietary, users are locked in to a single software provider for any and all functionality. The file formats are so complicated that alternative implementations are effectively impossible.

Lightweight markup is the solution

The solution is to use a markup format that allows specification of the document (providing its logical structure) separate from the typesetting of that document. Your document is specified – that is, generated and stored – as straight text. Any formatting issues are handled not by changing the formatting directly via a graphical user interface but by specifying the formatting textually using a specific textual notation. For instance, in the HTML markup language, a word or phrase that should be emphasized is textually indicated by surrounding it with <em>…</em>. HTML and other powerful markup formats like LaTeX and various XML formats carry relatively large overheads. They are complex to learn and difficult to read. (Typing raw XML is nobody’s idea of fun.) Ideally, we would want a markup format to be lightweight, that is, simple, portable, and human-readable even in its raw state.

Markdown is just such a lightweight markup language. In Markdown, emphasis is textually indicated by surrounding the phrase with asterisks, as is familiar from email conventions, for example, *lightweight*. See, that wasn’t so hard. Here’s another example: A bulleted list is indicated by prepending each item on a separate line with an asterisk, like this:

    * First item
    * Second item

which specifies the list

- First item

- Second item
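To make the separation of specification from typesetting concrete, here is a minimal sketch in Python of a converter for just the two notations described above: asterisks around a phrase for emphasis, and a leading asterisk for each bulleted item. It is a toy, not how pandoc or any real Markdown implementation works, and the function names `render` and `render_inline` are my own inventions for illustration.

```python
import html
import re


def render_inline(text: str) -> str:
    """Escape HTML special characters, then turn *phrase* into <em>phrase</em>."""
    escaped = html.escape(text, quote=False)
    # An emphasized phrase starts and ends with a non-space, non-asterisk character.
    return re.sub(r"\*([^*\s](?:[^*]*[^*\s])?)\*", r"<em>\1</em>", escaped)


def render(markdown: str) -> str:
    """Render a toy subset of Markdown: paragraphs, emphasis, and '* ' bullets."""
    out = []
    in_list = False
    for line in markdown.splitlines():
        stripped = line.strip()
        if not stripped:
            continue  # in this toy subset, blank lines only separate blocks
        is_item = stripped.startswith("* ")
        if is_item and not in_list:
            out.append("<ul>")
            in_list = True
        elif not is_item and in_list:
            out.append("</ul>")
            in_list = False
        if is_item:
            out.append(f"<li>{render_inline(stripped[2:])}</li>")
        else:
            out.append(f"<p>{render_inline(stripped)}</p>")
    if in_list:
        out.append("</ul>")
    return "\n".join(out)


source = "Markdown is *lightweight*.\n\n* First item\n* Second item"
print(render(source))
```

The plain-text source stays readable on its own, and the HTML is produced only at the end by a separate step. Swapping in a different `render` would retarget the same source to another output format without touching the document itself.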

Because specification and typesetting are separated, software is needed to convert from one to the other, to typeset the specified document. For reasons that will become clear later, I recommend the open-source software pandoc. Generally, scholars will want to convert their documents to PDF (though pandoc can convert to a huge variety of other formats). To convert file.md (the Markdown-format specification file) to PDF, the command

    pandoc file.md -o file.pdf

suffices. Alternatively, there are many editing programs that allow entering, editing, and typesetting Markdown. I sometimes use Byword. In fact, I’m using it now.
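If you would rather drive pandoc from a script than type the command each time, something like the following Python sketch would do. It only assembles the command shown above, optionally adding the `-V documentclass:...` variable that appears in the commands in this post's second footnote. The helper names `pandoc_command` and `typeset` are hypothetical, and actually running `typeset` assumes that pandoc (and, for PDF output, a LaTeX installation) is available on your machine.

```python
import shlex
import subprocess
from typing import List, Optional


def pandoc_command(source: str, target: str,
                   documentclass: Optional[str] = None) -> List[str]:
    """Build the pandoc argument list for converting source to target."""
    cmd = ["pandoc", source]
    if documentclass is not None:
        # Pass a template variable, written KEY:VAL as in the post's commands.
        cmd += ["-V", f"documentclass:{documentclass}"]
    cmd += ["-o", target]
    return cmd


def typeset(source: str, target: str,
            documentclass: Optional[str] = None) -> None:
    """Run pandoc; raises CalledProcessError if pandoc exits with an error."""
    subprocess.run(pandoc_command(source, target, documentclass), check=True)


# Print the commands rather than running them, so this works without pandoc.
print(shlex.join(pandoc_command("file.md", "file.pdf")))
print(shlex.join(pandoc_command("file.md", "file-amsart.pdf", "amsart")))
```

Keeping the command construction separate from the call that runs it makes it easy to check what will be executed before any typesetting happens.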

Markup languages range from the simple to the complex. I argue for Markdown for four reasons:

- Basic Markdown, sufficient for the vast majority of non-mathematical scholarly writing, is dead simple to learn and remember, because the markup notations were designed to mimic the kinds of textual conventions that people are used to – asterisks for emphasis and for indicating bulleted items, for instance. The coverage of this basic part of Markdown includes: emphasis, section structure, block quotes, bulleted and numbered lists, simple tables, and footnotes.

- Markdown is designed to be readable and the specified format understandable even in its plain text form, unlike heavier weight markup languages such as HTML.

- Markdown is well supported by a large ecology of software systems for entering, previewing, converting, typesetting, and collaboratively editing documents.

- Simple things are simple. More complicated things are more complicated, but not impossible. The extensions to Markdown provided by pandoc cover more or less the rest of what anyone might need for scholarly documents, including links, cross-references, figures, citations and bibliographies (via BibTeX), mathematical typesetting (via LaTeX), and much more. For instance, this equation (the Cauchy-Schwarz inequality) will typeset well in generated PDF files, and even in HTML pages using the wonderful MathJax library.

  \[ \left( \sum_{k=1}^n a_k b_k \right)^2 \leq \left( \sum_{k=1}^n a_k^2 \right) \left( \sum_{k=1}^n b_k^2 \right) \]

  (Pandoc also provides some extensions that simplify and extend the basic Markdown in quite nice ways, for instance, definition lists, strikeout text, a simpler notation for tables.)

Above, I claimed that scholars should use Markdown “with few exceptions”. The exceptions are:

- The document requires nontrivial mathematical typesetting. In that case, you’re probably better off using LaTeX. Anyone writing a lot of mathematics gave up word processors long ago and ought to know LaTeX anyway. Still, I’ll often do a first draft in Markdown with LaTeX for the math-y bits. Pandoc allows LaTeX to be included within a Markdown file (as I’ve done above), and preserves the LaTeX markup when converting the Markdown to LaTeX. From there, it can be typeset with LaTeX. Microsoft Word would certainly not be appropriate for this case.

- The document requires typesetting with highly refined or specialized aspects. I’d probably go with LaTeX here too, though desktop publishing software (InDesign) is also appropriate if there’s little or no mathematical typesetting required. Microsoft Word would not be appropriate for this case either.

Some have proposed that we need a special lightweight markup language for scholars. But Markdown is sufficiently close, and has such a strong community of support and software infrastructure, that it is more than sufficient for the time being. Further development would of course be helpful, so long as the urge to add “features” doesn’t overwhelm its core simplicity.

The hidden agenda

I have a hidden agenda. Markdown is sufficient for the bulk of cases of composing scholarly articles, and simple enough to learn that academics might actually use it. Markdown documents are also typesettable according to a separate specification of document style, and retargetable to multiple output formats (PDF, HTML, etc.).[2] Thus, Markdown could be used as the production file format for scholarly journals, which would eliminate the need for converting between the authors’ manuscript version and the publisher’s internal format, with all the concomitant errors that process is prone to produce.

In computer science, we have by now moved almost completely to a system in which authors provide articles in LaTeX so that no retyping or recomposition of the articles needs to be done for the publisher’s typesetting system. Publishers just apply their LaTeX style files to our articles. The result has been a dramatic improvement in correctness and efficiency. (It is in part due to such an efficient production process that the cost of running a high-end computer science journal can be so astoundingly low.)

Even better, there is a new breed of collaborative web-based document editing tools being developed that use Markdown as their core file format, tools like Draft and Authorea. They provide multi-author editing, versioning, version comparison, and merging. These tools could constitute the system by which scholarly articles are written, collaborated on, revised, copyedited, and moved to the journal production process, generating efficiencies for a huge range of journals, efficiencies that we’ve enjoyed in computer science and mathematics for years.

As Rob Walsh of ScholasticaHQ says, “One of the biggest bottlenecks in Open Access publishing is typesetting. It shouldn’t be.” A production ecology built around Markdown could be the solution.

[1] Many of the ideas in this post are not new. Complaints about WYSIWYG word-processing programs have a long history. Here’s a particularly trenchant diatribe pointing out the superiority of disentangling composition from typesetting. The idea of “scholarly Markdown” as the solution is also not new. See this post or this one for similar proposals. I go further in viewing certain current versions of Markdown (as implemented in Pandoc) as practical already for scholarly article production purposes, though I support coordinated efforts that could lead to improved lightweight markup formats for scholarly applications. Update September 22, 2014: I’ve just noticed a post by Dennis Tenen and Grant Wythoff at The Programming Historian on “Sustainable Authorship in Plain Text using Pandoc and Markdown” giving a tutorial on using these tools for writing scholarly history articles.

[2] As an example, I’ve used this very blog post. Starting with the Markdown source file (which I’ve attached to this post), I first generated HTML output for copying into the blog using the command

    pandoc -S --mathjax --base-header-level=3 markdownpost.md -o markdownpost.html

A nicely typeset version using the American Mathematical Society’s journal article document style can be generated with

    pandoc markdownpost.md -V documentclass:amsart -o markdownpost-amsart.pdf

To target the style of ACM transactions instead, the following command suffices:

    pandoc markdownpost.md -V documentclass:acmsmall -o markdownpost-acmsmall.pdf

Both PDF versions are also attached to this post.

Attachments

- markdownpost.md: The source file for this post in Markdown format

- markdownpost-amsart.pdf: The post rendered using pandoc according to AMS journal style

- markdownpost-acmsmall.pdf: The post rendered using pandoc according to ACM journal style

How universities can support open-access journal publishing

June 4th, 2014

To university administrators and librarians:

|

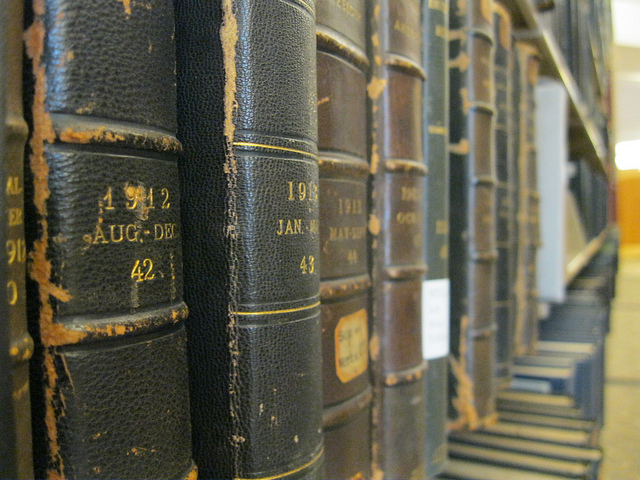

| …enablement becomes transformation… “Shelf of journals” image from Flickr user University of Illinois Library. Used by permission. |

As a university administrator or librarian, you may see the future in open-access journal publishing and may be motivated to help bring that future about.[1] I would urge you to establish or maintain an open-access fund to underwrite publication fees for open-access journals, but to do so in a way that follows the principles that underlie the Compact for Open-Access Publishing Equity (COPE). Those principles are two:

Principle 1: Our goal should be to establish an environment in which publishers are enabled[2] to change their business model from the unsustainable closed access model based on reader-side fees to a sustainable open access model based on author-side fees.

If publishers could and did switch to the open-access business model, in the long term the moneys saved in reader-side fees would more than cover the author-side fees, with open access added to boot.

But until a large proportion of the funded research comes with appropriately structured funds usable to pay author-side fees, publishers will find themselves in an environment that disincentivizes the move to the preferred business model. Only when the bulk of research comes with funds to pay author-side fees underwriting dissemination will publishers feel comfortable moving to that model. Principle 1 argues for a system where author-side fees for open-access journals should be largely underwritten on behalf of authors, just as the research libraries of the world currently underwrite reader-side fees on behalf of readers.[3] But who should be on the hook to pay the author-side fees on behalf of the authors? That brings us to Principle 2.

Principle 2: Dissemination is an intrinsic part of the research process. Those that fund the research should be responsible for funding its dissemination.

Research funding agencies, not universities, should be funding author-side fees for research funded by their grants. There’s no reason for universities to take on that burden on their behalf.[4] But universities should fund open-access publication fees for research that they fund themselves.

We don’t usually think of universities as research funders, but they are. They hire faculty to engage in certain core activities – teaching, service, and research – and their job performance and career advancement typically depend on all three. Sometimes researchers obtain outside funding for the research aspect of their professional lives, but where research is not funded from outside, it is still a central part of faculty members’ responsibilities. In those cases, where research is not funded by extramural funds, it is therefore being implicitly funded by the university itself. In some fields, the sciences in particular, outside funding is the norm; in others, the humanities and most social sciences, it is the exception. Regardless of the field, faculty research that is not funded from outside is university-funded research, and the university ought to be responsible for funding its dissemination as well.

The university can and should place conditions on funding that dissemination. In particular, it ought to require that if it is funding the dissemination, then that dissemination be open – free for others to read and build on – and that it be published in a venue that provides openness sustainably – a fully open-access journal rather than a hybrid subscription journal.

Organizing a university open-access fund consistent with these principles means that the university will, at present, fund few articles, for reasons detailed elsewhere. Don’t confuse slow uptake with low impact. The import of the fund is not to be measured by how many articles it makes open, but by how it contributes to the establishment of the enabling environment for the open-access business model. The enabling environment will have to grow substantially before enablement becomes transformation. It is no less important in the interim.

What about the opportunity cost of open-access funds? Couldn’t those funds be better used in our efforts to move to a more open scholarly communication system? Alternative uses of the funds are sometimes proposed, such as university libraries establishing and operating new open-access journals or paying membership fees to open-access publishers to reduce the author-side fees for their journals. But establishing new journals does nothing to reduce the need to subscribe to the old journals. It adds costs with no anticipation, even in the long term, of corresponding savings elsewhere. And paying membership fees to certain open-access publishers puts a finger on the scale so as to preemptively favor certain such publishers over others and to let funding agencies off the hook for their funding responsibilities. Such efforts should at best be funded after open-access funds are established to make good on universities’ responsibility to underwrite the dissemination of the research they’ve funded.

[1] It should go without saying that efforts to foster open-access journal publishing are completely consistent with, in fact aided by, fostering open access through self-deposit in open repositories (so-called “green open access”). I am a longtime and ardent supporter of such efforts myself, and urge you as university administrators and librarians to promote green open access as well. [Since it should go without saying, comments recapitulating that point will be deemed tangential and attended to accordingly.]

[2] I am indebted to Bernard Schutz of Max Planck Gesellschaft for his elegant phrasing of the issue in terms of the “enabling environment”.

[3] Furthermore, as I’ve argued elsewhere, disenfranchising readers through subscription fees is a more fundamental problem than disenfranchising authors through publication fees.

[4] In fact, by being willing to fund author-side fees for grant-funded articles, universities merely delay the day that funding agencies do their part by reducing the pressure from their fundees.

Public underwriting of research and open access

April 4th, 2014

…a social contract… Title page of the first octavo edition of Rousseau’s Social Contract

[This post is based loosely on my comments on a panel on 2 April 2014 for Terry Fisher’s CopyrightX course. Thanks to Terry for inviting me to participate and provoking this piece, and to my Berkman colleagues for their wonderful contributions to the panel session.]

Copyright is part of a social contract: You the author get a monopoly to exploit rights for a while in return for us the public gaining “the progress of Science and the Useful Arts”. The idea is that the direct financial benefit of exploiting those rights provides incentive for the author to create.

But this foundation for copyright ignores the fact that there are certain areas of creative expression in which direct financial benefit is not an incentive to create: in particular, academia. It’s not that academics who create and publish their research don’t need incentives, even financial incentives, to do so. Rather, the financial incentives are indirect. They receive no direct payment for the articles that they publish describing their research. They benefit instead from the personal uplift of contributing to human knowledge and seeing that knowledge advance science and the useful arts. Plus, their careers depend on the impact of their research, which is a result of its being widely read; it’s not all altruism.

In such cases, a different social contract can be in force without reducing creative expression. When the public underwrites the research that academics do – through direct research grants for instance – they can require in return that the research results must be made available to the public, without allowing for the limited period of exclusive exploitation. This is one of the arguments for the idea of open access to the scholarly literature. You see it in the Alliance for Taxpayer Access slogan “barrier-free access to taxpayer-funded research” and the White House statement that “The Obama Administration agrees that citizens deserve easy access to the results of research their tax dollars have paid for.” It is implemented in the NIH public access policy, requiring all articles funded by NIH grants to be made openly available through the PubMed Central website, where millions of visitors access millions of articles each week.

But here’s my point, one that is underappreciated even among open access supporters. The penetration of the notion of “taxpayer-funded research”, of “research their tax dollars have paid for”, is far greater than you might think. Yes, it includes research paid for by the $30 billion invested by the NIH each year, and the $7 billion in research funded by the NSF, and the $150 million funded by the NEH. But all university research benefits from the social contract with taxpayers that makes universities tax-exempt.[1]

The Association of American Universities makes clear this social contract:

The educational purposes of universities and colleges – teaching, research, and public service – have been recognized in federal law as critical to the well-being of our democratic society. Higher education institutions are in turn exempted from income tax so they can make the most of their revenues…. Because of their tax exemption, universities and colleges are able to use more resources than would otherwise be available to fund: academic programs, student financial aid, research, public extension activities, and their overall operations.

It’s difficult to estimate the size of this form of support to universities. The best estimate I’ve seen puts it at something like $50 billion per year for the income tax exemption. That’s more than the NIH, NSF, and (hardly worth mentioning) the NEH put together. It’s on par with the total non-defense federal R&D funding.
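As a rough check, using only the annual figures quoted earlier in this post (about $30 billion for the NIH, $7 billion for the NSF, and $150 million for the NEH) and taking the $50 billion estimate at face value, the comparison in billions of dollars per year works out to

\[ 30 + 7 + 0.15 = 37.15 < 50 \]

so the estimated income tax exemption alone would exceed the three agencies’ combined total by roughly $13 billion per year.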

And it’s not just exemption from income tax that universities benefit from. They also are exempt from property taxes for their campuses. Their contributors are exempt from tax for their charitable contributions to the university, which results ceteris paribus in larger donations. Their students are exempt from taxes on educational expenses. They receive government funding for scholarships, freeing up funds for research. Constructing an estimate of the total benefit to universities from all these sources is daunting. One study places the total value of all direct tax exemptions, federal, state, and local, for a single university, Northeastern University, at $97 million, accounting for well over half of all government support to the university. (Even this doesn’t count several of the items noted above.)

All university research, not just the grant-funded research, benefits from the taxpayer underwriting implicit in the tax exemption social contract. It would make sense, then, for taxpayers to require open access to all university research in return for continued tax-exempt status. Copyright is the citizenry paying authors with a monopoly in return for social benefit. But where the citizenry pays authors through some other mechanism, like $50 billion worth of tax exemption, it’s not a foregone conclusion that we should pay with the monopoly too.

Some people point out that just because the government funds something doesn’t mean that the public gets a free right of access. Indeed, the government funds various things that the public doesn’t get access to, or at least, not free access. The American Publisher’s Association points out, for instance, that although taxpayers pay for the national park system “they still have to pay a fee if they want to go in, and certainly if they want to camp.” On the other hand, you don’t pay when the fire department puts out a fire in your house, or to access the National Weather Service forecasts. It seems that the social contract is up for negotiation.

And that’s just the point. The social contract needs to be designed, and designed keeping in mind the properties of the goods being provided and the sustainability of the arrangement. In particular, funding of the contract can come from taxpayers or users or a combination of both. In the case of national parks, access to real estate is an inherently limited resource, and the benefit of access redounds primarily to the user (the visitor), so getting some of the income from visitors puts in place a reasonable market-based constraint.

Information goods are different. First, the benefits of access to information redound widely. Information begets information: researchers build on it, journalists report on it, products are based on it. The openness of NWS data means that farms can generate greater yields to benefit everyone (one part of the fourth of six goals in the NWS Strategic Plan). The openness of MBTA transit data means that a company can provide me with an iPhone app to tell me when my bus will arrive at my stop. Second, access to information is not an inherently limited resource. As Jefferson said, “He who receives an idea from me, receives instruction himself without lessening mine.” If access is to be restricted, it must be done artificially, through legal strictures or technological measures. The marginal cost of providing access to an academic article is, for all intents and purposes, zero. Thus, it makes more sense for the social contract around distributing research results to be funded exclusively from the taxpayer side rather than the user side, that is, funding agencies requiring completely free and open access for the articles they fund, and paying to underwrite the manifest costs of that access. (I’ve written in the past about the best way for funding agencies to organize that payment.)

It turns out that we, the public, are underwriting directly and indirectly every research article that our universities generate. Let’s think about what the social contract should provide us in return. Blind application of the copyright social contract would not be the likely outcome.

[1] Underappreciated by many, but as usual, not by Peter Suber, who anticipated this argument, for instance, in his seminal book Open Access:

All scholarly journals (toll access and OA) benefit from public subsidies. Most scientific research is funded by public agencies using public money, conducted and written up by researchers working at public institutions and paid with public money, and then peer-reviewed by faculty at public institutions and paid with public money. Even when researchers and peer reviewers work at private universities, their institutions are subsidized by publicly funded tax exemptions and tax-deductible donations. Most toll-access journal subscriptions are purchased by public institutions and paid with taxpayer money. [Emphasis added.]

A true transitional open-access business model

March 28th, 2014

…provide a transition path… “The Temple of Transition, Burning Man 2011” photo by flickr user Michael Holden, used by permission

David Willetts, the UK Minister for Universities and Research, has written a letter to Janet Finch responding to her committee’s “A Review of Progress in Implementing the Recommendations of the Finch Report”. Notable in Minister Willetts’s response is this excerpt:

Government wants [higher education institutions] to fully participate in the take up of Gold OA and create a better functioning market. Hence, Government looks to the publishing industry to develop innovative and sustainable solutions to address the ‘double-dipping’ issue perceived by institutions. Publishers have an opportunity to incentivise early adoption of Gold OA by moderating the total cost of publication for individual institutions. This would remove the final obstacle to greater take up of Gold OA, enabling universal acceptance of ‘hybrid’ journals.

It is important for two reasons: in its recognition, first, that the hybrid journal model has inherent obstacles as currently implemented (consistent with a previous post of mine), and second, that the solution is to make sure that individual institutions (as emphasized in the original) be properly incentivized for underwriting hybrid fees.

This development led me to dust off a pending post that has been sitting in my virtual filing cabinet for several years now, updated every once in a while as developments warranted. It addresses exactly this issue in some detail.

A document scanning smartphone handle

March 13th, 2014

…my solution to the problem… (Demonstrating the Scan-dle to my colleagues from the OSC over a beer in a local pub. Photo: Reinhard Engels)

They are at the end of the gallery; retired to their tea and scandal, according to their ancient custom.

For a project that I am working on, I needed to scan some documents in one of the Harvard libraries. Smartphones are a boon for this kind of thing, since they are highly portable and now come with quite high-quality cameras. The iPhone 5 camera, for instance, has a resolution of 3,264 x 2,448 pixels, which comes to about 300 dpi when scanning a letter-size sheet of paper, and its brightness depth of 8 bits per pixel provides a much higher effective resolution.
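As a rough check on that 300 dpi figure, here is a minimal sketch in Python. It assumes the whole frame exactly covers a letter-size sheet (8.5″ x 11″), which a real photo only approximates; the function name `scan_dpi` is just an illustrative choice.

```python
def scan_dpi(pixels_long, pixels_short, page_long_in, page_short_in):
    """Return (dpi along the long edge, dpi along the short edge).

    Assumes the image frame exactly covers the page, with no margin.
    """
    return pixels_long / page_long_in, pixels_short / page_short_in


# iPhone 5 pixel dimensions as quoted in the text; letter size is 11 x 8.5 inches.
long_dpi, short_dpi = scan_dpi(3264, 2448, 11.0, 8.5)
print(f"{long_dpi:.0f} x {short_dpi:.0f} dpi")  # prints "297 x 288 dpi"
```

Both edges land a little under 300 dpi, consistent with the "about 300 dpi" estimate.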

The downside of a smartphone, and any handheld camera, is the blurring that inevitably arises from camera shake when holding the camera and pressing the shutter release. True document scanners have a big advantage here. You could use a tripod, but dragging a tripod into the library is none too convenient, and staff may even disallow it, not to mention the expense of a tripod and smartphone tripod mount.

My solution to the problem of stabilizing my smartphone for document scanning purposes is a kind of document scanning smartphone handle that I’ve dubbed the Scan-dle. The stabilization that a Scan-dle provides dramatically improves the scanning ability of a smartphone, yet it’s cheap, portable, and unobtrusive.

The Scan-dle is essentially a triangular cross-section monopod made from foam board with a smartphone platform at the top. The angled base tilts the monopod so that the smartphone’s camera sees an empty area for the documents.[1] Judicious use of hook-and-loop fasteners allows the Scan-dle to fold small and flat in a couple of seconds.

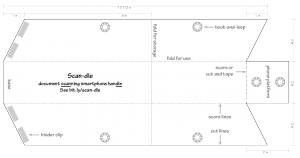

The plans at right show how the device is constructed. Cut from a sheet of foam board the shape indicated by the solid lines. (You can start by cutting out a 6″ x 13.5″ rectangle of board, then cutting out the bits at the four corners.) Then, along the dotted lines, carefully cut through the top paper and foam but not the bottom layer of paper. This allows the board to fold along these lines. (I recommend adding a layer of clear packaging tape along these lines on the uncut side for reinforcement.) Place four small binder clips along the bottom where indicated; these provide a flatter, more stable base. Stick on six 3/4″ hook-and-loop squares where indicated, and cut two 2.5″ pieces of 3/4″ hook-and-loop tape.

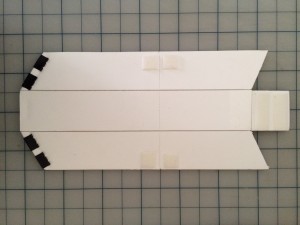

When the board is folded along the “fold for storage” line (see image at left), you can use the tape pieces to hold it closed and flat for storage. When the board is folded along the two “fold for use” lines (see image at right), the same tape serves to hold the board together into its triangular cross section. Hook-and-loop squares applied to a smartphone case hold the phone to the platform.

To use the Scan-dle, hold the base to a desk with one hand and operate the camera’s shutter release with the other, as shown in the video below. An additional trick for iPhone users is to use the volume buttons on a set of earbuds as a shutter release for the iPhone camera, further reducing camera shake.

The Scan-dle has several nice properties:

- It is made from readily available and inexpensive materials. I estimate that the cost of the materials used in a single Scan-dle is less than $10, of which about half is the iPhone case. In my case, I had everything I needed at home, so my incremental cost was $0.

- It is extremely portable. It folds flat to 6″ x 7″ x .5″, and easily fits in a backpack or handbag.

- It sets up and breaks down quickly. It switches between its flat state and ready-to-go in about five seconds.

- It is quite sufficient for stabilizing the smartphone.

The scanning area covered by a Scan-dle is about 8″ x 11″, just shy of a letter-size sheet. Of course, you can easily change the device’s height in the plans to increase that area. But I prefer to leave it short, which improves the resolution in scanning smaller pages. When a larger area is needed you can simply set the base of the Scan-dle on a book or two. Adding just 1.5″ to the height of the Scan-dle gives you coverage of about 10″ x 14″. By the way, after you’ve offloaded the photos onto your computer, programs like the freely available Scantailor can do a wonderful job of splitting, deskewing, and cropping the pages if you’d like.
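The resolution tradeoff behind keeping the Scan-dle short can be sketched numerically. This is only an illustration under simplifying assumptions: it uses the iPhone 5 pixel dimensions quoted earlier in the post, treats the image as exactly filling the stated scan area, and reports the lower of the two per-edge figures. The name `dpi_for_area` is hypothetical.

```python
# iPhone 5 pixel dimensions as quoted earlier in the post (long edge, short edge).
SENSOR_PX = (3264, 2448)


def dpi_for_area(long_in, short_in, sensor_px=SENSOR_PX):
    """Approximate dpi when the frame exactly covers a long_in x short_in area.

    Returns the smaller of the two per-edge values, i.e. the limiting resolution.
    """
    return min(sensor_px[0] / long_in, sensor_px[1] / short_in)


# Scan areas from the text: about 8 x 11 inches normally,
# about 10 x 14 inches with 1.5 inches of added height.
for long_in, short_in in [(11, 8), (14, 10)]:
    print(f"{short_in} x {long_in} in: about {dpi_for_area(long_in, short_in):.0f} dpi")
```

Under these assumptions the larger coverage area costs roughly a fifth of the resolution (about 297 dpi versus about 233 dpi), which is the reason for raising the device only when a page actually needs it.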

Let me know in the comments section if you build a Scan-dle and how it works for you, especially if you come up with any use tips or design improvements.

Materials:

(Links are for reference only; no need to buy in these quantities.)

- foam board

- double-sided Velcro tape

- sticky-back Velcro coins

- clear packaging tape

- 3/4″ binder clips

- iPhone case

[1] The design bears a resemblance to a 2011 Kickstarter-funded document scanner attachment called the Scandy, though there are several differences. The Scandy was a telescoping tube that attached with a vise mount to a desk; the Scan-dle simplifies by using the operator’s hand as the mount. The Scandy’s telescoping tube allowed the scan area to be sized to the document; the Scan-dle must be rested on some books to increase the scan area. Because of its solid construction, the Scandy was undoubtedly slightly heavier and bulkier than the Scan-dle. The Scandy cost some €40 ($55); the Scan-dle comes in at a fraction of that. Finally, the Scandy seems no longer to be available; the open-source Scan-dle never varies in its availability.

A model OA journal publication agreement

February 19th, 2014

…decided to write my own…

In a previous post, I proposed that open-access journals use the CC-BY license for their scholar-contributed articles:

As long as you’re founding a new journal, its contents should be as open as possible consistent with appropriate attribution. That exactly characterizes the CC-BY license. It’s also a tremendously simple approach. Once the author grants a CC-BY license, no further rights need be granted to the publisher. There’s no need for talk about granting the publisher a nonexclusive license to publish the article, etc., etc. The CC-BY license already allows the publisher to do so. There’s no need to talk about what rights the author retains, since the author retains all rights subject to the nonexclusive CC-BY license. I’ve made the case for a CC-BY license at length elsewhere.

Recently, a journal asked me how to go about doing just that. What should their publication agreement look like? It was a fair question, and one I didn’t have a ready answer for. The “Online Guide to Open Access Journals Publishing” provides a template agreement that is refreshingly minimalist, but by my lights misses some important aspects. I looked around at various journals to see what they did, but didn’t find any agreements that seemed ideal either. So I decided to write my own. Herewith is my proposal for a model OA publication agreement.